Coffee Documentation

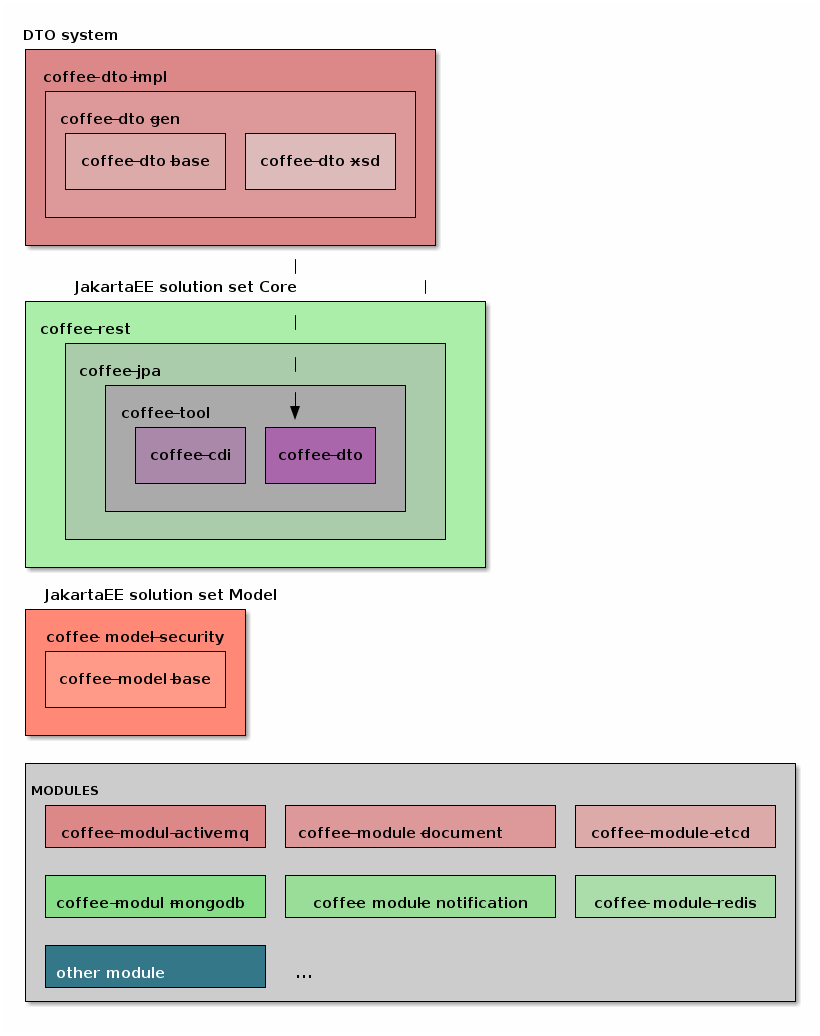

coff:ee is a Jakarta EE solution set designed to bring together common algorithms from the enterprise world and to provide a basic solution that can be tailored to our own needs if required.

Every company develops its own solution set to bring its projects together, so that the same modules do not have to be rewritten, copied and maintained separately.

This solution set can serve both SOA and microservice architectures. Its architecture is modular, and almost everything can be overridden at project level. The framework is based on the following systems, which are crucial for the whole operation:

- Jakarta EE 10+
- Java 11+
- CDI 4.0+
- JBoss Logging
- gson
- guava
- commons-lang3
- apache http
- resteasy
- microprofile.io 6.0+

1. Architecture structure

2. Coffee Core

2.1. coffee-se

The purpose of this module is to collect classes that are as independent as possible from the Jakarta EE API.

2.1.1. coffee-se-logging

Module for logging- and MDC-related JakartaEE components without any dependencies.

Logging

Contains a basic logging system using java.util.logging.Logger.

import hu.icellmobilsoft.coffee.se.logging.Logger;

private Logger log = Logger.getLogger(LogSample.class);

public String logMessage() {
    log.trace("sample log message");
    return "something";
}

For more description and usage via CDI, see coffee-cdi/logger.

MDC

The module contains its own framework for MDC (Mapped Diagnostic Context) management, because different projects may use different MDC solutions (e.g. JBoss, SLF4J, Logback…).

It is used via the static methods of the hu.icellmobilsoft.coffee.se.logging.mdc.MDC class.

The MDC class searches the classpath for an available MDC implementation and delegates calls to the class it finds.

Currently the org.jboss.logging.MDC and org.slf4j.MDC implementations are supported, but support can be extended at project level using the service loader mechanism.

MDC extension

To use an MDC implementation that coff:ee does not support, implement the MDCAdapter and MDCAdapterProvider interfaces, then register the MDCAdapterProvider implementation with the service loader mechanism.

Connecting a CustomMDC:

- Implement MDCAdapter for CustomMDC (com.project.CustomMDCAdapter):

public class CustomMDCAdapter implements MDCAdapter {
    @Override
    public String get(String key) {
        // the adapter delegates its calls to our CustomMDC
        return CustomMDC.get(key);
    }
    // implement any further MDCAdapter methods the same way
}

- Implement MDCAdapterProvider to build the CustomMDCAdapter (com.project.CustomMDCAdapterProvider):

public class CustomMDCAdapterProvider implements MDCAdapterProvider {
    @Override
    public MDCAdapter getAdapter() throws Exception {
        return new CustomMDCAdapter();
    }
}

- Bind CustomMDCAdapterProvider via the service loader by creating the file META-INF/services/hu.icellmobilsoft.coffee.se.logging.mdc.MDCAdapterProvider with the following content:

com.project.CustomMDCAdapterProvider

MDC order

The available MDC implementations are tried in the following order; the first one that works is used:

- ServiceLoader extensions
- org.jboss.logging.MDC
- org.slf4j.MDC
- CoffeeMDCAdapter: the coff:ee implementation, used only as a fallback. Its values are stored in a ThreadLocal, so they must be handled separately if you want them to be logged.
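The lookup order above can be illustrated with plain JDK code. In this sketch, SketchMdcAdapter and SketchMdcAdapterProvider are hypothetical, simplified stand-ins for MDCAdapter and MDCAdapterProvider (the real interfaces are not reproduced here), and the resolver only reports which implementation would be chosen: ServiceLoader extensions first, then org.jboss.logging.MDC and org.slf4j.MDC if they are on the classpath, then a ThreadLocal fallback in the spirit of CoffeeMDCAdapter.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.ServiceLoader;

// Hypothetical, simplified stand-ins for coff:ee's MDCAdapter / MDCAdapterProvider (not the real signatures)
interface SketchMdcAdapter {
    String get(String key);

    void put(String key, String value);
}

interface SketchMdcAdapterProvider {
    SketchMdcAdapter getAdapter() throws Exception;
}

// Fallback in the spirit of CoffeeMDCAdapter: values live in a per-thread map
final class ThreadLocalMdcAdapter implements SketchMdcAdapter {
    private static final ThreadLocal<Map<String, String>> CONTEXT = ThreadLocal.withInitial(HashMap::new);

    @Override
    public String get(String key) {
        return CONTEXT.get().get(key);
    }

    @Override
    public void put(String key, String value) {
        CONTEXT.get().put(key, value);
    }
}

final class MdcResolver {
    // Known MDC classes, probed after the ServiceLoader extensions, in the documented order
    private static final String[] KNOWN_MDC_CLASSES = { "org.jboss.logging.MDC", "org.slf4j.MDC" };

    /** Returns the name of the first usable MDC implementation. */
    static String resolve(ClassLoader loader) {
        // 1. Project-level extensions registered in META-INF/services
        for (SketchMdcAdapterProvider provider : ServiceLoader.load(SketchMdcAdapterProvider.class, loader)) {
            try {
                if (provider.getAdapter() != null) {
                    return provider.getClass().getName();
                }
            } catch (Exception e) {
                // a failing provider is skipped and the next candidate is tried
            }
        }
        // 2-3. Known implementations, if they are on the classpath
        for (String className : KNOWN_MDC_CLASSES) {
            try {
                Class.forName(className, false, loader);
                return className;
            } catch (ClassNotFoundException e) {
                // not available, try the next one
            }
        }
        // 4. ThreadLocal fallback
        return ThreadLocalMdcAdapter.class.getName();
    }

    public static void main(String[] args) {
        System.out.println("MDC implementation: " + resolve(MdcResolver.class.getClassLoader()));
    }
}
```

The ThreadLocal fallback also shows why its values need separate handling: a value put on one thread is invisible on other threads, and a logging backend that does not know about this map will not print it.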

2.2. coffee-dto

Module designed to collect the ancestors of the basic DTO, adapter and exception classes, mainly so that common code can be extracted into the Coffee Jakarta EE solution set. It should not have any dependencies, except for annotations serving documentation and JAXB functions.

It consists of several submodules.

2.2.1. coffee-dto-base

Contains java classes that serve as ancestors for the entire Coffee javaEE solution set.

This module contains the universal XSD adapters for java.time (Java 8+) classes and the base paths of the REST endpoints.

The module also contains the basic exception classes. All other exceptions must be derived from these.

2.2.2. coffee-dto-xsd

Its content should preferably be XSDs that serve as guides for projects. They should preferably contain very universal XSD simpleTypes and complexTypes, which projects can adapt to their own needs.

| This module does not generate DTO objects, it only adds XSDs. |

2.2.3. coffee-dto-gen

This module is used to generate the Coffee DTO. It is organized separately to be easily replaced from the dependency structure.

2.2.4. coffee-dto-impl

The module serves as a sample implementation for coffee-dto-base, coffee-dto-xsd and coffee-dto-gen.

Projects using Coffee will include it.

If the DTOs generated by Coffee do not match the target project, you have to exclude them from this module.

By itself it is a universal, usable module.

2.3. coffee-cdi

The purpose of this module is to connect the jakarta EE, logger, deltaspike and microprofile-config.

All CDI based. Description of components and use cases below:

2.3.1. logger

Coffee uses its own logging system, for several reasons:

- it wraps the actual logging system (currently java.util.logging)
- it collects every log entry written during a request, including entries that are not written to the console (or elsewhere) because the logger is set to a higher level (for example, if the root logger is set to INFO, TRACE and DEBUG entries are not output anywhere). These entries can be logged in case of an error to help the debugging process
- other information can also be put into the request-level log container
- it keeps track of the highest log level logged during the request
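The request-level collection idea can be sketched with java.util.logging levels alone. RequestLogBuffer is a hypothetical class, not part of coff:ee: it records every message regardless of the configured output level, reports whether the message would also reach the normal output, and remembers the highest level seen, so that the full request log can be dumped when an error occurs.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.logging.Level;

// Hypothetical request-level log container: keeps every message, even below the output level,
// and tracks the highest level logged so far
final class RequestLogBuffer {
    private final Level outputLevel;
    private final List<String> entries = new ArrayList<>();
    private Level highestLevel; // null until the first message

    RequestLogBuffer(Level outputLevel) {
        this.outputLevel = outputLevel;
    }

    /** Records the message and returns whether it would also reach the normal log output. */
    boolean log(Level level, String message) {
        entries.add(level.getName() + " " + message);
        if (highestLevel == null || level.intValue() > highestLevel.intValue()) {
            highestLevel = level;
        }
        return level.intValue() >= outputLevel.intValue();
    }

    /** Everything logged during the request, e.g. to write out when an error occurs. */
    List<String> entries() {
        return Collections.unmodifiableList(entries);
    }

    Level highestLevel() {
        return highestLevel;
    }

    public static void main(String[] args) {
        RequestLogBuffer buffer = new RequestLogBuffer(Level.INFO);
        buffer.log(Level.FINE, "loaded invoice [42]"); // below INFO: not printed, but kept
        buffer.log(Level.SEVERE, "saving invoice failed");
        if (buffer.highestLevel() == Level.SEVERE) {
            buffer.entries().forEach(System.out::println); // dump the whole request log
        }
    }
}
```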

Each class has its own logger, loggers are not inheritable, not transferable. The CDI + jboss logger provides the basis for using it:

(1)

@Inject
@ThisLogger
private hu.icellmobilsoft.coffee.cdi.logger.AppLogger log;

(2)

@Inject
private hu.icellmobilsoft.coffee.se.logging.Logger log;

(3)

import hu.icellmobilsoft.coffee.cdi.logger.LogProducer;

public static String blabla() {
    LogProducer.getStaticDefaultLogger(BlaBla.class).trace("class blabla");
    LogProducer.getStaticDefaultLogger("BlaBla").trace("class name blabla");
    return "blabla";
}

(4)

import hu.icellmobilsoft.coffee.se.logging.Logger;

public static String blabla() {
    Logger.getLogger(BlaBla.class).trace("class blabla");
    Logger.getLogger("BlaBla").trace("class name blabla");
    return "blabla";
}

| 1 | where the class is used in @RequestScoped (or a wider) scope (90% of the cases) |
| 2 | where there is no request scope |
| 3 | where injection is not used, e.g. in static methods |
| 4 | in Java SE environments, e.g. client jars |

If a parameter is included in a log message, it MUST be placed between square brackets, as in "[parameter]":

log.trace("Generated id: [{0}]", id);

The purpose of this is to make the value of the variable immediately identifiable when reading the log, including when it is "" (empty String) or null.
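The bracket convention is easiest to see by formatting a few values. This sketch assumes that the {0} placeholders follow java.text.MessageFormat syntax, as the log.trace example suggests; BracketedParamDemo is a hypothetical helper.

```java
import java.text.MessageFormat;

// Sketch assuming MessageFormat-style {0} placeholders, as in the log.trace example above
final class BracketedParamDemo {
    static String format(String pattern, Object... params) {
        return MessageFormat.format(pattern, params);
    }

    public static void main(String[] args) {
        System.out.println(format("Generated id: [{0}]", ""));            // Generated id: []
        System.out.println(format("Generated id: [{0}]", (Object) null)); // Generated id: [null]
        System.out.println(format("Generated id: {0}", ""));              // the empty value is invisible
    }
}
```

Under the same assumption, note that MessageFormat formats numeric arguments with the locale's grouping separators (12345 may appear as 12,345), so passing an identifier as a String keeps it verbatim.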

2.3.2. config

As a configuration solution we use the microprofile-config solution.

| microprofile.io https://github.com/eclipse/microprofile-config http://mirrors.ibiblio.org/eclipse/microprofile/microprofile-config-1.3/microprofile-config-spec.pdf |

In short, what is it? Configuration parameters can be specified in a wide range of ways. Hard-coding a configuration parameter into a properties file is not enough, because its value may differ from environment to environment. The values can be specified at a separate level, be it ETCD, a properties file, a system property, an environment variable or anything else. microprofile-config looks up a given key in all available sources and uses the value with the highest priority. Basic use cases:

- in code: when no CDI container is used, in static methods and in other such cases
- static: the value is read once, when it is injected, and stays the same afterwards
- dynamic: the value of the key is looked up every time it is used, so configuration changes take effect without a restart (for example, setting a system property at runtime)

import org.eclipse.microprofile.config.Config;

import org.eclipse.microprofile.config.ConfigProvider;

import org.eclipse.microprofile.config.inject.ConfigProperty;

import jakarta.inject.Inject;

import jakarta.inject.Provider;

(1)

public String kodban() {

Config config = ConfigProvider.getConfig();

String keyValue = config.getValue("key", String.class);

return keyValue;

}

(2)

@Inject

@ConfigProperty(name="key")

String keyValue;

public String statikusan() {

return keyValue;

}

(3)

@Inject

@ConfigProperty(name="key")

Provider<String> keyValue;

public String dynamic() {

return keyValue.get();

}

| 1 | value retrieved in code |
| 2 | static value injection |
| 3 | dynamic value retrieval |
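The lookup rule and the difference between the static and dynamic styles can be modelled without a CDI container. SketchSource and SketchConfig are hypothetical classes, not the MicroProfile Config API; the ordinals 400, 300 and 100 are the MicroProfile Config defaults for system properties, environment variables and META-INF/microprofile-config.properties. The Supplier returned by provider() plays the role of Provider<String>.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Supplier;

// Hypothetical model of a configuration source; not the MicroProfile ConfigSource interface
final class SketchSource {
    final String name;
    final int ordinal;
    final Map<String, String> values;

    SketchSource(String name, int ordinal, Map<String, String> values) {
        this.name = name;
        this.ordinal = ordinal;
        this.values = values;
    }
}

final class SketchConfig {
    private final List<SketchSource> sources = new ArrayList<>();

    SketchConfig add(SketchSource source) {
        sources.add(source);
        return this;
    }

    /** Looks the key up in every source; the source with the highest ordinal wins. */
    Optional<String> getValue(String key) {
        return sources.stream()
                .filter(source -> source.values.containsKey(key))
                .max(Comparator.comparingInt((SketchSource source) -> source.ordinal))
                .map(source -> source.values.get(key));
    }

    /** "Dynamic" access: the lookup is repeated on every get(), like Provider<String>. */
    Supplier<String> provider(String key) {
        return () -> getValue(key).orElse(null);
    }

    public static void main(String[] args) {
        Map<String, String> file = new HashMap<>(Map.of("key", "from-properties-file"));
        Map<String, String> system = new HashMap<>();
        SketchConfig config = new SketchConfig()
                .add(new SketchSource("META-INF/microprofile-config.properties", 100, file))
                .add(new SketchSource("system properties", 400, system));

        String staticValue = config.getValue("key").orElseThrow(); // read once
        Supplier<String> dynamicValue = config.provider("key");    // read on every get()

        system.put("key", "changed-at-runtime");
        System.out.println(staticValue);        // from-properties-file
        System.out.println(dynamicValue.get()); // changed-at-runtime
    }
}
```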

2.3.3. trace

The annotations in the hu.icellmobilsoft.coffee.cdi.trace.annotation package allow coff:ee modules to provide trace information: they can plug into an existing trace flow or start a new one.

The trace implementation used by coff:ee is independent and can be selected per project. If no trace implementation is selected, no trace propagation occurs in the system by default.

Trace usage

- The @Traced annotation makes a method traceable.
- SpanAttribute - links the span data of coff:ee modules to the values of mp-opentracing or mp-telemetry
- component - module identifier that is part of the trace, e.g. redis-stream
- kind - type of the span, e.g. CONSUMER (default INTERNAL)
- dbType - database type, e.g. redis

...

@Inject

private ITraceHandler traceHandler;

...

public Object execute(CdiQueryInvocationContext context) {

//create jpa query ...

Traced traced = new Traced.Literal(SpanAttribute.Database.COMPONENT, SpanAttribute.Database.KIND, SpanAttribute.Database.DB_TYPE);

String operation = context.getRepositoryClass() + "." + context.getMethod().getName();

return traceHandler.runWithTrace(() -> context.executeQuery(jpaQuery), traced, operation);

}

@Traced(component = SpanAttribute.Redis.Stream.COMPONENT, kind = SpanAttribute.Redis.Stream.KIND, dbType = SpanAttribute.Redis.DB_TYPE)

@Override

public void onStream(StreamEntry streamEntry) throws BaseException {

...

}

2.3.4. Metrics

The metrics implementation used by coff:ee is independent and can be selected per project. If no metrics implementation is selected, the default Noop*MetricsHandler is activated.

The choice of implementations can be found in the coffee-module-mp-metrics/micrometer documentation.
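Both the trace support and the metrics support fall back to a do-nothing implementation when no implementation is selected. The pattern can be sketched as follows; SketchTraceHandler, NoopTraceHandler, RecordingTraceHandler and HandlerSelection are hypothetical names, and in a CDI application the selection would typically be made with alternatives or producers rather than a boolean flag.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical handler abstraction; the real coff:ee interfaces (e.g. ITraceHandler) differ
interface SketchTraceHandler {
    <T> T runWithTrace(Supplier<T> work, String operation);
}

// Default when no implementation is selected: run the work, record nothing
final class NoopTraceHandler implements SketchTraceHandler {
    @Override
    public <T> T runWithTrace(Supplier<T> work, String operation) {
        return work.get();
    }
}

// Stand-in for a real implementation: records the operation name and its duration
final class RecordingTraceHandler implements SketchTraceHandler {
    final List<String> spans = new ArrayList<>();

    @Override
    public <T> T runWithTrace(Supplier<T> work, String operation) {
        long start = System.nanoTime();
        try {
            return work.get();
        } finally {
            spans.add(operation + " took " + (System.nanoTime() - start) / 1_000_000 + " ms");
        }
    }
}

final class HandlerSelection {
    /** Returns the recording handler only when an implementation has been enabled. */
    static SketchTraceHandler select(boolean implementationEnabled) {
        return implementationEnabled ? new RecordingTraceHandler() : new NoopTraceHandler();
    }

    public static void main(String[] args) {
        SketchTraceHandler handler = select(false);
        System.out.println(handler.runWithTrace(() -> "query result", "InvoiceRepository.findById"));
    }
}
```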

2.4. coffee-configuration

The purpose of this module is to complement MicroProfile Config, e.g. with caching.

// for limited use where speed and high transaction count are not important,

// in such cases it is worth choosing one of the other methods

@Inject

@ConfigProperty(name="key")

Provider<String> keyValue;

// traditional microprofile-config query

public String kodban() {

Config config = ConfigProvider.getConfig();

String keyValue = config.getValue("key", String.class);

return keyValue;

}

2.4.1. ConfigurationHelper class

This class allows you to query type specific configurations.

@Inject

private ConfigurationHelper configurationHelper;

...

Integer ttl = configurationHelper.getInteger("public.login.session.token.validity");

2.4.2. ApplicationConfiguration class

Similar to the ConfigurationHelper class, but it uses @ApplicationScoped level caching, where cached values are kept for 30 minutes. The cached values can also be cleared immediately, which can be used to build additional logic (e.g. change a value externally in ETCD, then send a JMS topic message so that the values are forgotten and read again immediately).
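The caching behaviour described above can be sketched with a map of timestamped entries. CachedConfiguration is a hypothetical class, not the coff:ee ApplicationConfiguration implementation; the loader function stands for the underlying configuration lookup, and the clock is a Supplier<Instant> so that expiry can be tested.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical sketch of a time-limited configuration cache; not the coff:ee code
final class CachedConfiguration {
    private static final class Entry {
        final String value;
        final Instant loadedAt;

        Entry(String value, Instant loadedAt) {
            this.value = value;
            this.loadedAt = loadedAt;
        }
    }

    private final Map<String, Entry> cache = new ConcurrentHashMap<>();
    private final Function<String, String> loader; // e.g. a MicroProfile Config lookup
    private final Duration ttl;                    // 30 minutes in the description above
    private final Supplier<Instant> clock;         // injectable so expiry can be tested

    CachedConfiguration(Function<String, String> loader, Duration ttl, Supplier<Instant> clock) {
        this.loader = loader;
        this.ttl = ttl;
        this.clock = clock;
    }

    String getString(String key) {
        Instant now = clock.get();
        Entry entry = cache.get(key);
        if (entry == null || !now.isBefore(entry.loadedAt.plus(ttl))) {
            entry = new Entry(loader.apply(key), now); // missing or expired: reload
            cache.put(key, entry);
        }
        return entry.value;
    }

    /** Forgets every cached value, e.g. after a JMS topic message reports an ETCD change. */
    void clear() {
        cache.clear();
    }

    public static void main(String[] args) {
        CachedConfiguration config = new CachedConfiguration(System::getProperty, Duration.ofMinutes(30), Instant::now);
        System.out.println(config.getString("java.version")); // loaded once, then served from the cache
    }
}
```

A value is reloaded once it is as old as the configured ttl, and clear() forces the next read to go back to the source. The check-then-put sequence is not atomic, so two concurrent misses may both load the value, which is harmless for configuration data.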

@Inject

private ApplicationConfiguration applicationConfiguration;

public String kodban() {

String minVersion = applicationConfiguration.getString(EtcdVal.KEY_PUBLIC_INVOICE_MIN_REQUEST_VERSION);

return minVersion;

}

2.5. coffee-tool

The purpose of this module is to collect basic util classes.

All date, String, Number or other static classes should be placed here.

MavenURLHandler

Auxiliary class for XSD Catalog usage; it allows URLs such as

maven:hu.icellmobilsoft.coffee.dto.xsd:coffee-dto-xsd:jar::!/xsd/hu/icellmobilsoft/coffee/dto/common/common.xsd

to be handled in code. It must be activated explicitly, so it does not interfere with anything unless you enable it.

AesGcmUtil

AES 256 GCM encryption and decryption helper class. The cipher is based on AES/GCM/NoPadding; the key must be 256 bits long and the IV 12 bytes long. It includes helper methods for key and IV generation. Example usage:

String inputText = "test input test";
byte[] key = AesGcmUtil.generateKey(); //B64: HMTpQ4/aEDKoPGMMqMtjeTJ2s26eOEv1aUrE+syjcB8=
byte[] iv = AesGcmUtil.generateIv(); //B64: 5nqOVSjoGYk/oSwj
byte[] encoded = AesGcmUtil.encryptWithAes256GcmNoPadding(key, inputText.getBytes(StandardCharsets.UTF_8), iv);
String encodedB64 = Base64.getEncoder().encodeToString(encoded); //fRCURHp5DWXtrESNHMo1DUoAcejvKDu9Y5wd5zXblg==
byte[] decoded = AesGcmUtil.decryptWithAes256GcmNoPadding(key, encoded, iv);
String decodedString = new String(decoded, StandardCharsets.UTF_8); //test input test

A given key-IV pair must not be reused! For this reason, the encrypt/decrypt methods without an IV parameter should only be called with single-use keys!

JsonUtil

JsonUtil is a wrapper around Gson.

Example: deserialization of a generic type

import java.lang.reflect.Type;
import java.util.List;
import com.google.gson.reflect.TypeToken;

String paramString = "[{\"key\":\"testTitleKey\",\"value\":\"testTitleValue\"}]";
Type paramListType = new TypeToken<List<ParameterType>>() {}.getType();
List<ParameterType> list = JsonUtil.toObjectUncheckedEx(paramString, paramListType);
System.out.println(list.get(0).getKey());
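The AES-GCM scheme used by AesGcmUtil (AES/GCM/NoPadding, 256-bit key, 12-byte IV) can be reproduced with the JDK's javax.crypto API alone. This is a hypothetical sketch, not the AesGcmUtil source: the method names are illustrative and the 128-bit authentication tag length is an assumption.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Base64;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical JDK-only sketch of the AesGcmUtil scheme; method names are illustrative
final class AesGcmSketch {
    private static final int KEY_BITS = 256; // AES-256
    private static final int IV_BYTES = 12;  // 96-bit IV
    private static final int TAG_BITS = 128; // authentication tag length (assumption)
    private static final SecureRandom RANDOM = new SecureRandom();

    static byte[] generateKey() throws GeneralSecurityException {
        KeyGenerator generator = KeyGenerator.getInstance("AES");
        generator.init(KEY_BITS, RANDOM);
        return generator.generateKey().getEncoded();
    }

    static byte[] generateIv() {
        byte[] iv = new byte[IV_BYTES];
        RANDOM.nextBytes(iv);
        return iv;
    }

    static byte[] encrypt(byte[] key, byte[] plain, byte[] iv) throws GeneralSecurityException {
        return run(Cipher.ENCRYPT_MODE, key, plain, iv);
    }

    // Throws AEADBadTagException if the ciphertext, key or IV does not match
    static byte[] decrypt(byte[] key, byte[] encrypted, byte[] iv) throws GeneralSecurityException {
        return run(Cipher.DECRYPT_MODE, key, encrypted, iv);
    }

    private static byte[] run(int mode, byte[] key, byte[] input, byte[] iv) throws GeneralSecurityException {
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(mode, new SecretKeySpec(key, "AES"), new GCMParameterSpec(TAG_BITS, iv));
        return cipher.doFinal(input); // on encryption the tag is appended to the ciphertext
    }

    public static void main(String[] args) throws GeneralSecurityException {
        byte[] key = generateKey();
        byte[] iv = generateIv(); // fresh IV for every encryption with this key
        byte[] encrypted = encrypt(key, "test input test".getBytes(StandardCharsets.UTF_8), iv);
        System.out.println(Base64.getEncoder().encodeToString(encrypted));
        System.out.println(new String(decrypt(key, encrypted, iv), StandardCharsets.UTF_8)); // test input test
    }
}
```

With a 128-bit tag, a 15-byte input such as "test input test" yields 31 bytes (44 Base64 characters), and any modification of the ciphertext makes decryption fail with AEADBadTagException. A fresh IV has to be generated for every encryption under the same key.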

2.6. coffee-jpa

The purpose of this module is to connect the JPA.

Includes the deltaspike jpa and hibernate hookup. It contains the paging helper classes, transaction handling classes, and the ancestor of all *Services, the BaseService class.

2.6.1. TransactionHelper

Contains helper methods with @Transactional annotations for @FunctionalInterfaces declared in the FunctionalInterfaces class.

This means that if the entry point of a class does not run in a transaction, but a code snippet within it should, you only need to extract that snippet into a private method or into any @FunctionalInterface provided by coffee and call the corresponding method of TransactionHelper, which performs the transactional execution.

This avoids having to extract the transactional logic into a public method annotated with @Transactional and call it via CDI.current(), or moving it into a separate class.
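The mechanism can be pictured with a few lines of plain Java. The sketch is hypothetical: SketchBaseException, the two functional interfaces and SketchTransactionHelper only mimic the roles of BaseException, BaseExceptionFunction/BaseExceptionRunner and TransactionHelper, and begin/commit/rollback are merely recorded, because in the real helper the @Transactional interceptor of the CDI container does that work when the method is called through the injected proxy.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical checked exception standing in for BaseException
class SketchBaseException extends Exception {
    SketchBaseException(String message) {
        super(message);
    }
}

// Unlike java.util.function types, these may throw the checked exception
@FunctionalInterface
interface SketchExceptionFunction<T, R> {
    R apply(T input) throws SketchBaseException;
}

@FunctionalInterface
interface SketchExceptionRunner {
    void run() throws SketchBaseException;
}

// Hypothetical helper: transaction boundaries are only recorded, no transaction manager is involved
final class SketchTransactionHelper {
    final List<String> events = new ArrayList<>();

    <T, R> R executeWithTransaction(SketchExceptionFunction<T, R> function, T input) throws SketchBaseException {
        events.add("begin");
        try {
            R result = function.apply(input);
            events.add("commit");
            return result;
        } catch (SketchBaseException | RuntimeException e) {
            events.add("rollback");
            throw e;
        }
    }

    // Variant for void logic, e.g. () -> saveInvoice(invoice)
    void executeWithTransaction(SketchExceptionRunner runner) throws SketchBaseException {
        executeWithTransaction(ignored -> {
            runner.run();
            return null;
        }, null);
    }
}
```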

import jakarta.enterprise.inject.Model;

import jakarta.inject.Inject;

import hu.icellmobilsoft.coffee.dto.exception.BaseException;

import hu.icellmobilsoft.coffee.dto.exception.InvalidParameterException;

import hu.icellmobilsoft.coffee.jpa.helper.TransactionHelper;

@Model

public class TransactionHelperExample {

@Inject

private InvoiceService invoiceService;

@Inject

private TransactionHelper transactionHelper;

public void example(Invoice invoice) throws BaseException {

if (invoice == null) {

throw new InvalidParameterException("invoice is NULL!");

}

// operations outside the transaction

// ...

// BaseExceptionFunction running in transaction

transactionHelper.executeWithTransaction(invoiceService::save, invoice);

// in-transaction BaseExceptionRunner (e.g.: for void method)

transactionHelper.executeWithTransaction(() -> saveInvoice(invoice));

// operations outside the transaction

// ...

}

private void saveInvoice(Invoice invoice) throws BaseException {

invoiceService.save(invoice);

}

}

2.6.2. BatchService

BatchService is used to perform bulk database operations (insert, update, delete) in batches.

It mainly contains batch operations based on PreparedStatement, where the SQL is generated with the help of Hibernate.

Type support

BatchService is guaranteed to support operations on the following types:

| Support type | Java types |
|---|---|
| Null, treated as a value | All types evaluated as null by Hibernate. |
| CustomType, treated as a type | Currently no specific type; it exists to allow extension. |
| Enum, treated as a type | All enum types. |
| ManyToOneType, treated as a type | All types annotated with @ManyToOne. |
| ConvertedBasicType, treated as a type | All types with a converter. |
| BasicType, treated as a type | boolean, Boolean; char, Character; java.sql.Date, java.sql.Time, java.sql.Timestamp; java.util.Date, java.util.Calendar; LocalDate, LocalTime, LocalDateTime, OffsetTime, OffsetDateTime, ZonedDateTime, Instant; Blob, byte[], Byte[] |
| Managed by the JDBC driver | byte, short, int, long, float, double; Byte, Short, Integer, Long, Float, Double; BigInteger, BigDecimal; String; all other types not in the list |

| For special types it is recommended to use a custom converter. |

| Types not listed here are handled by the specific JDBC driver. |

| Type support has been tested on PostgreSQL and Oracle databases. On other databases the type coverage should work in theory, but anomalies may occur. |

Null value handling

BatchService starts type handling with a null scan. If the value of any type returned by hibernate is null, BatchService sets the value as SqlTypes.NULL JDBC type using the PreparedStatement.setNull() method.

| JDBC drivers also use this method. |

CustomType handling

BatchService currently does not handle any CustomType directly, but it provides the possibility to extend it. By default, all CustomType types are handled by the JDBC driver used!

Enum types handling

BatchService resolves Enum types as follows:

| Java code | value inserted by BatchService |
|---|---|
| Enum field without @Enumerated (JPA default) | The ordinal of the enum value, as returned by the ordinal() method. |
| @Enumerated(EnumType.ORDINAL) | The ordinal of the enum value, as returned by the ordinal() method. |
| @Enumerated(EnumType.STRING) | The name of the enum value, as returned by the name() method. |
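The rule in the enum table can be expressed as a small function. SketchEnumType is a hypothetical stand-in for jakarta.persistence.EnumType so that the sketch runs without JPA on the classpath, and InvoiceStatus is an illustrative enum.

```java
// Hypothetical stand-in for jakarta.persistence.EnumType
enum SketchEnumType { ORDINAL, STRING }

// Illustrative enum
enum InvoiceStatus { DRAFT, SENT, PAID }

final class EnumBindingSketch {
    /** Value to bind: name() for STRING, ordinal() otherwise (ORDINAL is also JPA's default). */
    static Object valueToBind(Enum<?> value, SketchEnumType mapping) {
        if (mapping == SketchEnumType.STRING) {
            return value.name();
        }
        return value.ordinal();
    }

    public static void main(String[] args) {
        System.out.println(valueToBind(InvoiceStatus.PAID, SketchEnumType.ORDINAL)); // 2
        System.out.println(valueToBind(InvoiceStatus.PAID, SketchEnumType.STRING));  // PAID
    }
}
```

Because the ordinal depends on declaration order, reordering the constants of an enum stored as ORDINAL silently changes the meaning of existing rows; STRING avoids this at the cost of wider columns.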

ManyToOneType handling

Within an entity, fields annotated with @ManyToOne (jakarta.persistence.ManyToOne) are treated by Hibernate as ManyToOneType.

BatchService handles these ManyToOneType types as follows:

| Java code | value inserted by BatchService |
|---|---|
| field annotated with @ManyToOne | BatchService takes the unique identifier of the referenced entity using EntityHelper.getLazyId() and inserts that. |

ConvertedBasicType handling

Fields within an entity that have a converter, i.e. are annotated with @Convert (jakarta.persistence.Convert), are treated by Hibernate as ConvertedBasicType.

@Convert(converter = YearMonthAttributeConverter.class)
@Column(name = "YEAR_MONTH")
private YearMonth yearMonth;

For this type, Hibernate knows the JDBC type and all additional settings of the converted value, but the conversion itself must be done manually. BatchService therefore calls the given converter, then passes the converted value, together with the ConvertedBasicType (BasicType) type set up by Hibernate, on to the BasicType handling process.
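The two-step flow (convert first, then bind as a basic type) can be sketched as follows. SketchAttributeConverter is a hypothetical, reduced stand-in for jakarta.persistence.AttributeConverter (only the write direction), and YearMonthToStringConverter is illustrative: storing YearMonth as a "yyyy-MM" String is an assumption, the project's YearMonthAttributeConverter may use another column type.

```java
import java.time.YearMonth;
import java.util.function.Function;

// Hypothetical, reduced stand-in for jakarta.persistence.AttributeConverter (write direction only)
interface SketchAttributeConverter<X, Y> {
    Y convertToDatabaseColumn(X attribute);
}

// Illustrative converter storing YearMonth as "yyyy-MM"
final class YearMonthToStringConverter implements SketchAttributeConverter<YearMonth, String> {
    @Override
    public String convertToDatabaseColumn(YearMonth attribute) {
        return attribute == null ? null : attribute.toString();
    }
}

final class ConvertedValueSketch {
    /** Runs the converter first, then hands the converted value to the basic-type binding step. */
    static <X, Y, R> R bind(X attribute, SketchAttributeConverter<X, Y> converter, Function<Y, R> basicTypeBinding) {
        Y converted = converter.convertToDatabaseColumn(attribute);
        return basicTypeBinding.apply(converted);
    }

    public static void main(String[] args) {
        String bound = bind(YearMonth.of(2024, 2), new YearMonthToStringConverter(), value -> "setString(" + value + ")");
        System.out.println(bound); // setString(2024-02)
    }
}
```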

BasicType handling

The BasicType type combines the Java and JDBC types, i.e. for each Java type it holds the corresponding JDBC type. BatchService therefore branches on the JDBC type code stored in the JDBC type.

Date type BasicType handling

BasicType types with the jdbc type code SqlTypes.DATE are handled by BatchService as follows:

| Java code | value inserted by BatchService |
|---|---|
| java.sql.Date | Set directly, without conversion, using the PreparedStatement.setDate() method. |
| LocalDate | Converted to java.sql.Date, then set using the PreparedStatement.setDate() method. |
| java.util.Date | Converted to java.sql.Date, then set using the PreparedStatement.setDate() method. |
| java.util.Calendar | Converted to java.sql.Date, then set using the PreparedStatement.setDate() method. |

| Types not in the table are set by the JDBC driver. |
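A minimal JDK-only sketch of the DATE conversions follows. SqlDateSketch is hypothetical; dispatching on the runtime type of an Object illustrates the idea and is not necessarily how BatchService is structured.

```java
import java.time.LocalDate;
import java.util.Calendar;

// Hypothetical helper mirroring the DATE table; the real BatchService code may differ
final class SqlDateSketch {
    static java.sql.Date toSqlDate(Object value) {
        if (value instanceof java.sql.Date) { // checked first: java.sql.Date extends java.util.Date
            return (java.sql.Date) value;
        }
        if (value instanceof LocalDate) {
            return java.sql.Date.valueOf((LocalDate) value);
        }
        if (value instanceof java.util.Date) {
            return new java.sql.Date(((java.util.Date) value).getTime());
        }
        if (value instanceof Calendar) {
            return new java.sql.Date(((Calendar) value).getTimeInMillis());
        }
        throw new IllegalArgumentException("Left to the JDBC driver: " + value.getClass().getName());
    }

    public static void main(String[] args) {
        System.out.println(toSqlDate(LocalDate.of(2024, 2, 29))); // 2024-02-29
    }
}
```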

Time type BasicType handling

BasicType types with the SqlTypes.TIME and SqlTypes.TIME_WITH_TIMEZONE jdbc type codes are handled by BatchService as follows:

| Java code | value inserted by BatchService |
|---|---|
| java.sql.Time | Set directly, without conversion, using the PreparedStatement.setTime() method. |
| LocalTime | Converted to java.sql.Time, then set using the PreparedStatement.setTime() method. |
| OffsetTime | Converted to the system time zone returned by ZoneId.systemDefault(), then converted to java.sql.Time and set using the PreparedStatement.setTime() method. |
| java.util.Date | Converted to java.sql.Time, then set using the PreparedStatement.setTime() method. |
| java.util.Calendar | Converted to java.sql.Time, then set using the PreparedStatement.setTime() method. |

For the types listed in the table, if hibernate.jdbc.time_zone is set in persistence.xml, then the time zone is also passed to the PreparedStatement.setTime() method, so that the JDBC driver can perform the appropriate time offset according to the time zone.

| It is up to the JDBC driver to set the types not listed in the table. |

Timestamp type BasicType handling

The SqlTypes.TIMESTAMP, SqlTypes.TIMESTAMP_UTC and SqlTypes.TIMESTAMP_WITH_TIMEZONE jdbc type code BasicType types are handled by BatchService as follows:

| Java code | value inserted by BatchService |

|---|---|

|

Can be set directly, without conversion, using the PreparedStatement.setTimestamp() method. |

|

Converted to java.sql.Timestamp type, then set using PreparedStatement.setTimestamp() method. |

|

Converted to the system time zone returned by ZoneId.systemDefault(), then converted to java.sql.Timestamp and set using the PreparedStatement.setTimestamp() method. |

|

Converted to the system time zone returned by ZoneId.systemDefault(), then converted to java.sql.Timestamp and set using the PreparedStatement.setTimestamp() method. |

|

Converted to java.sql.Timestamp using the system time zone returned by ZoneId.systemDefault(), then set using the PreparedStatement.setTimestamp() method. |

|

Converted to java.sql.Timestamp type, then set using PreparedStatement.setTimestamp() method. |

|

Converted to java.sql.Timestamp type, then set using PreparedStatement.setTimestamp() method. |

|

Converted to java.sql.Timestamp type, then set using PreparedStatement.setTimestamp() method. |

|

Converted to java.sql.Timestamp type, then set using PreparedStatement.setTimestamp() method. |

For the types listed in the table, if hibernate.jdbc.time_zone is set in persistence.xml, then the time zone is also passed to the PreparedStatement.setTimestamp() method, so that the JDBC driver can perform the appropriate time offset according to the time zone.

| It is up to the JDBC driver to set the types not listed in the table. |
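The zone handling for TIMESTAMP values can be sketched with java.time and java.sql.Timestamp. SqlTimestampSketch is hypothetical: the table does not spell out which Java type belongs to each row, so the type-to-rule mapping here is an assumption, and the target zone is a parameter (BatchService uses ZoneId.systemDefault()) so that the result can be tested deterministically.

```java
import java.sql.Timestamp;
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.OffsetDateTime;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.ZonedDateTime;
import java.util.Calendar;

// Hypothetical helper for TIMESTAMP values; zone-aware values are shifted into the given zone
final class SqlTimestampSketch {
    static Timestamp toTimestamp(Object value, ZoneId zone) {
        if (value instanceof Timestamp) { // checked first: Timestamp extends java.util.Date
            return (Timestamp) value;
        }
        if (value instanceof LocalDateTime) {
            return Timestamp.valueOf((LocalDateTime) value);
        }
        if (value instanceof OffsetDateTime) {
            return Timestamp.valueOf(((OffsetDateTime) value).atZoneSameInstant(zone).toLocalDateTime());
        }
        if (value instanceof ZonedDateTime) {
            return Timestamp.valueOf(((ZonedDateTime) value).withZoneSameInstant(zone).toLocalDateTime());
        }
        if (value instanceof Instant) {
            return Timestamp.valueOf(LocalDateTime.ofInstant((Instant) value, zone));
        }
        if (value instanceof java.util.Date) {
            return new Timestamp(((java.util.Date) value).getTime());
        }
        if (value instanceof Calendar) {
            return new Timestamp(((Calendar) value).getTimeInMillis());
        }
        throw new IllegalArgumentException("Left to the JDBC driver: " + value.getClass().getName());
    }

    public static void main(String[] args) {
        OffsetDateTime value = OffsetDateTime.of(2024, 1, 15, 10, 0, 0, 0, ZoneOffset.ofHours(2));
        System.out.println(toTimestamp(value, ZoneOffset.UTC).toLocalDateTime()); // 2024-01-15T08:00
    }
}
```

Timestamp.valueOf(LocalDateTime) interprets the local date-time in the JVM default time zone, so when zone is ZoneId.systemDefault() the resulting Timestamp denotes the same instant as the original value.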

Boolean type BasicType handling

BasicType types with SqlTypes.BOOLEAN jdbc type code are handled by BatchService as follows:

| Java code | value inserted by BatchService |
|---|---|
| boolean | Set directly, without conversion, using the PreparedStatement.setBoolean() method. |
| Boolean | Set directly, without conversion, using the PreparedStatement.setBoolean() method. |

| For types not listed in the table, it is up to the JDBC driver to set them. |

Char type BasicType handling

BasicType types with the SqlTypes.CHAR jdbc type code are handled by BatchService as follows:

| Java code | value inserted by BatchService |
|---|---|
| char | Converted to String, then set using the PreparedStatement.setString() method. |
| Character | Converted to String, then set using the PreparedStatement.setString() method. |

| Types not listed in the table are set by the JDBC driver. |

Data type BasicType handling

The SqlTypes.BLOB, SqlTypes.VARBINARY and SqlTypes.LONGVARBINARY jdbc type code BasicType types are handled by BatchService as follows:

| Java code | value inserted by BatchService | ||

|---|---|---|---|

|

Converted to InputStream type, then set using PreparedStatement.setBinaryStream() method. |

||

|

Can be set directly, without conversion, using the PreparedStatement.setBytes() method. |

||

|

If the legacy array handling is enabled:

If the legacy array handling is not enabled:

|

||

|

Can be set directly, without conversion, using the PreparedStatement.setBytes() method. |

||

|

If the legacy array handling is enabled:

If the legacy array handling is not enabled:

|

| The JDBC driver is responsible for setting types not included in the table. |

|

To enable legacy array handling, |

2.6.3. microprofile-health support

DatabaseHealth can check whether the database is reachable. DatabasePoolHealth can check how heavily the connection pool used for database operations is loaded.

This function is based on metric data, so one of the metric implementations must be activated:

<dependency>

<groupId>hu.icellmobilsoft.coffee</groupId>

<artifactId>coffee-module-mp-micrometer</artifactId> (1)

</dependency>

<!-- or -->

<dependency>

<groupId>hu.icellmobilsoft.coffee</groupId>

<artifactId>coffee-module-mp-metrics</artifactId> (2)

</dependency>

| 1 | Micrometer metric implementation |

| 2 | Microprofile-metrics metric implementation |

@ApplicationScoped

public class DatabaseHealthCheck {

@Inject

private DatabaseHealth databaseHealth;

@Inject

private Config mpConfig;

public HealthCheckResponse checkDatabaseConnection() {

DatabaseHealthResourceConfig config = new DatabaseHealthResourceConfig();

config.setBuilderName("oracle");

config.setDatasourceUrl("jdbc:postgresql://service-postgredb:5432/service_db?currentSchema=service");

String datasourceName = mpConfig.getOptionalValue(IConfigKey.DATASOURCE_DEFAULT_NAME, String.class)

.orElse(IConfigKey.DATASOURCE_DEFAULT_NAME_VALUE);

config.setDsName(datasourceName);

try {

return databaseHealth.checkDatabaseConnection(config);

}catch (BaseException e) {

// need to be careful with exceptions, because the probe check will fail if we don't handle the exception correctly

return HealthCheckResponse.builder().name("oracle").up().build();

}

}

@Produces

@Startup

public HealthCheck produceDataBaseCheck() {

return this::checkDatabaseConnection;

}

}

@ApplicationScoped

public class DatabasePoolHealthCheck {

@Inject

private DatabasePoolHealth databasePoolHealth;

public HealthCheckResponse checkDatabasePoolUsage() {

try {

return databasePoolHealth.checkDatabasePoolUsage("oracle");

}catch (BaseException e) {

return HealthCheckResponse.builder().name("oracle").up().build();

}

}

@Produces

@Readiness

public HealthCheck produceDataBasePoolCheck() {

return this::checkDatabasePoolUsage;

}

}

2.7. coffee-rest

Module designed for REST communication and management.

It includes the apache http client, various REST loggers and filters. It also contains the language, REST activator and Response util class.

2.7.1. BaseRestLogger

This class is used to log the HTTP requests and responses of the application. It is activated manually at project level using the following pattern:

package hu.icellmobilsoft.project.common.rest.logger;

import jakarta.inject.Inject;

import jakarta.ws.rs.ext.Provider;

import hu.icellmobilsoft.coffee.cdi.logger.AppLogger;

import hu.icellmobilsoft.coffee.cdi.logger.ThisLogger;

import hu.icellmobilsoft.coffee.dto.common.LogConstants;

import hu.icellmobilsoft.coffee.rest.log.BaseRestLogger;

@Provider (1)

public class RestLogger extends BaseRestLogger {

@Inject

@ThisLogger

private AppLogger log;

@Override

public String sessionKey() { (2)

return LogConstants.LOG_SESSION_ID;

}

}

| 1 | JAX-RS activator (this is what activates the logger) |

| 2 | Session ID key name in HTTP header |

The HTTP request-response log itself is compiled by the hu.icellmobilsoft.coffee.rest.log.RequestResponseLogger class, and can be used in other situations if needed, for example logging an error.

During request logging, sensitive data is masked both in the headers and in the JSON/XML body (e.g. X-PASSWORD: * instead of X-PASSWORD: 1234).

Whether a value must be protected is decided based on its key (the key for headers and JSON content, the tag name for XML). By default, a key is sensitive if it matches one of the regexes [\w\s]*?secret[\w\s]*? or [\w\s]*?pass[\w\s]*? (e.g. userPassword, secretToken, …).

If needed, a project can override the regex by setting coffee.config.log.sensitive.key.pattern in one of the default microprofile-config sources (system property, environment variable, META-INF/microprofile-config.properties); multiple patterns can be specified separated by commas.
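Header masking with these default patterns can be sketched as follows. SensitiveKeyMasker is a hypothetical helper, not the coff:ee implementation; it accepts the patterns in the same comma-separated form as coffee.config.log.sensitive.key.pattern. Using Matcher.find() with case-insensitive patterns is an assumption of this sketch, chosen so that a key such as X-Password matches (a full match would fail on the hyphen).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical masking helper built on the documented default patterns
final class SensitiveKeyMasker {
    // Same format as coffee.config.log.sensitive.key.pattern: comma-separated regexes
    static final String DEFAULT_PATTERNS = "[\\w\\s]*?secret[\\w\\s]*?,[\\w\\s]*?pass[\\w\\s]*?";

    private final List<Pattern> patterns = new ArrayList<>();

    SensitiveKeyMasker(String commaSeparatedPatterns) {
        for (String regex : commaSeparatedPatterns.split(",")) {
            // case-insensitive find() is an assumption: it lets "X-Password" match
            patterns.add(Pattern.compile(regex.trim(), Pattern.CASE_INSENSITIVE));
        }
    }

    boolean isSensitive(String key) {
        return patterns.stream().anyMatch(pattern -> pattern.matcher(key).find());
    }

    /** Returns a copy of the headers in which the values of sensitive keys are replaced by "*". */
    Map<String, String> maskHeaders(Map<String, String> headers) {
        Map<String, String> masked = new LinkedHashMap<>();
        headers.forEach((key, value) -> masked.put(key, isSensitive(key) ? "*" : value));
        return masked;
    }

    public static void main(String[] args) {
        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("X-Password", "1234");
        headers.put("X-UserName", "sample");
        System.out.println(new SensitiveKeyMasker(DEFAULT_PATTERNS).maskHeaders(headers)); // {X-Password=*, X-UserName=sample}
    }
}
```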

2019-02-01 16:31:33.044 INFO [thread:default task-1] [hu.icellmobilsoft.coffee.rest.log.BaseRestLogger] [sid:2G7XOSOJBCFRMW08] - * Server in-bound request

> POST http://localhost:8083/external/public/sampleService/sample/interface

> -- Path parameters:

> -- Query parameters:

> -- Header parameters:

> accept: text/xml;charset=utf-8

> Connection: keep-alive

> Content-Length: 106420

> content-type: text/xml;charset=utf-8

> Host: localhost:8083

> User-Agent: Apache-HttpClient/4.5.3 (Java/1.8.0_191)

> X-Client-Address: 10.10.20.49

> X-CustomerNumber: 10098990

> X-Password: *

> X-UserName: sample

>

> entity: [<?xml version="1.0" encoding="UTF-8"?>

<SampleRequest xmlns="http://schemas.nav.gov.hu/OSA/1.0/api">

<header>

<requestId>RID314802331803</requestId>

<timestamp>2019-02-01T15:31:32.432Z</timestamp>

<requestVersion>1.1</requestVersion>

<headerVersion>1.0</headerVersion>

</header>

<user>

<passwordHash>*</passwordHash>

... // shortened

2019-02-01 16:31:34.042 INFO [thread:default task-1] [hu.icellmobilsoft.coffee.rest.log.BaseRestLogger] [sid:2G7XOSOJBCFRMW08] - < Server response from [http://localhost:8083/external/public/sampleService/sample/interface]:

< Status: [200], [OK]

< Media type: [text/xml;charset=UTF-8]

< -- Header parameters:

< Content-Type: [text/xml;charset=UTF-8]

< entity: [{"transactionId":"2G7XOSYJ6VUEJJ09","header":{"requestId":"RID314802331803","timestamp":"2019-02-01T15:31:32.432Z","requestVersion":"1.1","headerVersion":"1.0"},"result":{"funcCode":"OK"},"software":{"softwareId":"123456789123456789","softwareName":"string","softwareOperation":"LOCAL_SOFTWARE","softwareMainVersion":"string","softwareDevName":"string","softwareDevContact":"string","softwareCountryCode":"HU","softwareDescription":"string"}]

2.7.2. Optimized BaseRestLogger

This class works similarly to BaseRestLogger. The only difference is that it uses less memory: instead of copying the request and response entity streams for logging, it collects the entities while they are being read and written.

It is activated manually at the project level using the following pattern:

package hu.icellmobilsoft.project.common.rest.logger;

import jakarta.inject.Inject;

import jakarta.ws.rs.ext.Provider;

import hu.icellmobilsoft.coffee.cdi.logger.AppLogger;

import hu.icellmobilsoft.coffee.cdi.logger.ThisLogger;

import hu.icellmobilsoft.coffee.dto.common.LogConstants;

import hu.icellmobilsoft.coffee.rest.log.optimized.BaseRestLogger;

@Provider (1)

public class RestLogger extends BaseRestLogger {

@Inject

@ThisLogger

private AppLogger log;

@Override

public String sessionKey() { (2)

return LogConstants.LOG_SESSION_ID;

}

}

| 1 | JAX-RS activator (this is what activates the logger) |

| 2 | Session ID key name in HTTP header |

The HTTP request-response log itself is compiled by the hu.icellmobilsoft.coffee.rest.log.optimized.RequestResponseLogger class, which carries the temporary @Named("optimized_RequestResponseLogger") annotation. This class also determines the request and response entity log limits: if the request or response entity is application/octet-stream or multipart/form-data and the REST interface is not annotated with LogSpecifier, the logged size is limited.
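The idea of collecting the entity while it is being written, instead of copying the whole stream up front, can be sketched with a FilterOutputStream. EntityCollectingOutputStream is hypothetical, not the optimized logger's code: it forwards every byte to the real stream and keeps at most limit bytes for the log.

```java
import java.io.ByteArrayOutputStream;
import java.io.FilterOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: copies at most `limit` bytes into a log buffer while the entity is written
final class EntityCollectingOutputStream extends FilterOutputStream {
    private final ByteArrayOutputStream logCopy = new ByteArrayOutputStream();
    private final int limit;

    EntityCollectingOutputStream(OutputStream target, int limit) {
        super(target);
        this.limit = limit;
    }

    @Override
    public void write(int b) throws IOException {
        out.write(b); // the real entity is always written completely
        if (logCopy.size() < limit) {
            logCopy.write(b);
        }
    }

    @Override
    public void write(byte[] b, int off, int len) throws IOException {
        out.write(b, off, len);
        int room = Math.min(len, limit - logCopy.size());
        if (room > 0) {
            logCopy.write(b, off, room);
        }
    }

    /** The (possibly truncated) entity text for the log. */
    String loggedEntity() {
        return new String(logCopy.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

Because the limit counts bytes, a cut can fall in the middle of a multi-byte UTF-8 character, which then shows up as a replacement character in the logged text.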

2.7.3. LogSpecifier

REST logging can be customized per endpoint with the hu.icellmobilsoft.coffee.rest.log.annotation.LogSpecifier annotation. It can be specified multiple times on the same element, and its scope can be limited by its target field, which can list more than one target (by default all targets are active). This makes it possible to customize the REST request-response and the microprofile-client request-response logging separately.

Only one LogSpecifier can be used per endpoint and LogSpecifierTarget.

|

Specifiable targets are the enum values of hu.icellmobilsoft.coffee.rest.log.annotation.enumeration.LogSpecifierTarget:

| LogSpecifierTarget | Scope |
|---|---|
| REQUEST | REST endpoint request |
| RESPONSE | REST endpoint response |
| CLIENT_REQUEST | MicroProfile REST Client endpoint request |
| CLIENT_RESPONSE | MicroProfile REST Client endpoint response |

Currently the LogSpecifier is prepared for the following cases:

- logging of the request-response on the endpoint can be disabled with the noLog option of the LogSpecifier annotation;
- the size of the logged body on the endpoint can be limited with the maxEntityLogSize field of the LogSpecifier annotation.

If maxEntityLogSize is set to a value other than LogSpecifier.NO_LOG, then for the application/octet-stream mediaType received by the REST endpoint only the first 5000 characters of the request are written.

Note: When the optimized BaseRestLogger class is used and no LogSpecifier annotation is specified, only the first 5000 characters of the request and response entities are logged for the application/octet-stream and multipart/form-data mediaTypes.

@POST
@Produces({ MediaType.APPLICATION_JSON, MediaType.TEXT_XML, MediaType.APPLICATION_XML })
@Consumes({ MediaType.APPLICATION_JSON, MediaType.TEXT_XML, MediaType.APPLICATION_XML })
@LogSpecifier(target = { LogSpecifierTarget.REQUEST, LogSpecifierTarget.CLIENT_REQUEST }, maxEntityLogSize = 100) // (1)
@LogSpecifier(target = LogSpecifierTarget.RESPONSE, maxEntityLogSize = 5000) // (2)
@LogSpecifier(target = LogSpecifierTarget.CLIENT_RESPONSE, noLog = true) // (3)
WithoutLogResponse postWithoutLog(WithoutLogRequest withoutLogRequest) throws BaseException;

| 1 | Request entity log size is limited to 100 bytes, for both REST calls and MicroProfile REST Client usage |
| 2 | Response entity log size is limited to 5000 characters for REST calls |
| 3 | Disables response logging for MicroProfile REST Client responses |

LogSpecifiersAnnotationProcessor

LogSpecifier is accompanied by hu.icellmobilsoft.coffee.rest.log.annotation.processing.LogSpecifiersAnnotationProcessor, whose purpose is to prevent multiple values from being defined for the same target, which the repeatability of LogSpecifier would otherwise allow.

To do this, it counts at compile time how many @LogSpecifier annotations cover each LogSpecifierTarget; if it finds more than one for any target, it fails the compilation.
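The rule enforced by the processor can be sketched in plain Java: each @LogSpecifier is modelled as the set of targets it covers, and any target covered more than once is a conflict. SampleTarget is a hypothetical stand-in for LogSpecifierTarget, and this illustrates the rule only, not the processor's actual implementation (which works on annotation mirrors at compile time). An annotation without an explicit target covers all targets, so it is modelled as EnumSet.allOf(...).

```java
import java.util.ArrayList;
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical mirror of LogSpecifierTarget, for illustration only
enum SampleTarget { REQUEST, RESPONSE, CLIENT_REQUEST, CLIENT_RESPONSE }

final class SampleLogSpecifierCheck {

    // Each element lists the targets covered by one @LogSpecifier on the same method
    static List<SampleTarget> conflictingTargets(List<Set<SampleTarget>> specifiers) {
        Map<SampleTarget, Integer> counts = new EnumMap<>(SampleTarget.class);
        for (Set<SampleTarget> targets : specifiers) {
            for (SampleTarget target : targets) {
                counts.merge(target, 1, Integer::sum);
            }
        }
        List<SampleTarget> conflicts = new ArrayList<>();
        // EnumMap iterates in enum declaration order, so the result is deterministic
        counts.forEach((target, count) -> {
            if (count > 1) {
                conflicts.add(target);
            }
        });
        return conflicts; // a real annotation processor would report these as compile errors
    }
}
```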

@POST
@Produces({ MediaType.APPLICATION_JSON, MediaType.TEXT_XML, MediaType.APPLICATION_XML })
@Consumes({ MediaType.APPLICATION_JSON, MediaType.TEXT_XML, MediaType.APPLICATION_XML })
@LogSpecifier(maxEntityLogSize = 100) // (1)
@LogSpecifier(target = LogSpecifierTarget.RESPONSE, maxEntityLogSize = 5000) // (2)
ValidatorResponse postValidatorTest(ValidatorRequest validatorRequest) throws BaseException;

| 1 | Since no target is specified, the log size of every entity is limited to 100 bytes/characters, including LogSpecifierTarget.RESPONSE. |
| 2 | LogSpecifierTarget.RESPONSE limits the entity log size to 5000 characters. |

Since in the above example the REST response log size would be 100 according to the first annotation and 5000 according to the second, LogSpecifiersAnnotationProcessor fails the compilation with the following error, to avoid hidden logic:

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.8.1:compile (default-compile) on project project-sample-service: Compilation failure
[ERROR] .../project-sample-service/src/main/java/hu/icellmobilsoft/project/sample/service/rest/ISampleTestRest.java:[43,23] Multiple LogSpecifiers are defined for the [RESPONSE] of [postValidatorTest]! Conflicting LogSpecifiers:[[@hu.icellmobilsoft.coffee.rest.log.annotation.LogSpecifier(noLog=false, maxEntityLogSize=100, target={REQUEST, RESPONSE, CLIENT_REQUEST, CLIENT_RESPONSE}), @hu.icellmobilsoft.coffee.rest.log.annotation.LogSpecifier(noLog=false, maxEntityLogSize=5000, target={RESPONSE})]]

2.7.4. JaxbTool

This class brings together the transformations and manipulations related to XML objects. Its structure is fully modular: everything can be customized to the project's needs via CDI. By default, its modules provide the following functionality:

Request version determination

This is provided by the IRequestVersionReader interface.

Its built-in, replaceable implementation is hu.icellmobilsoft.coffee.rest.validation.xml.reader.XmlRequestVersionReader.

It reads the version based on the

...<header>...<requestVersion>1.1</requestVersion>...</header>...

XML structure; you are free to change it to another structure, or even to read the version from an HTTP header.
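A minimal sketch of reading the version from that structure with the JDK's StAX API. It matches elements by local name only and returns the first requestVersion found inside header; the class name is hypothetical, and this is not the XmlRequestVersionReader implementation.

```java
import java.io.StringReader;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

// Hypothetical sketch: reads <header>...<requestVersion>x</requestVersion>...</header> with StAX
final class SampleRequestVersionReader {

    static String readRequestVersion(String xml) {
        XMLInputFactory factory = XMLInputFactory.newInstance();
        // Requests are untrusted input: disable DTDs and external entities
        factory.setProperty(XMLInputFactory.SUPPORT_DTD, false);
        factory.setProperty(XMLInputFactory.IS_SUPPORTING_EXTERNAL_ENTITIES, false);
        try {
            XMLStreamReader reader = factory.createXMLStreamReader(new StringReader(xml));
            try {
                boolean inHeader = false;
                while (reader.hasNext()) {
                    int event = reader.next();
                    if (event == XMLStreamConstants.START_ELEMENT) {
                        String name = reader.getLocalName(); // namespace-agnostic match
                        if ("header".equals(name)) {
                            inHeader = true;
                        } else if (inHeader && "requestVersion".equals(name)) {
                            return reader.getElementText().trim();
                        }
                    } else if (event == XMLStreamConstants.END_ELEMENT && "header".equals(reader.getLocalName())) {
                        inHeader = false;
                    }
                }
                return null; // no version element found
            } finally {
                reader.close();
            }
        } catch (XMLStreamException e) {
            throw new IllegalArgumentException("Request is not well-formed XML", e);
        }
    }
}
```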

XSD error collection

For marshal (DTO → XML String) or unmarshal (XML String/Stream → DTO), validation against the XSD can be requested.

In this case, a hu.icellmobilsoft.coffee.rest.validation.xml.exception.XsdProcessingException provides the list of errors that violate the XSD rules.

These errors are collected and provided by the IXsdValidationErrorCollector interface.

Its built-in, replaceable implementation is hu.icellmobilsoft.coffee.rest.validation.xml.error.XsdValidationErrorCollector.
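The collect-instead-of-fail behaviour can be sketched with the JDK's javax.xml.validation API: an ErrorHandler whose error() method records the violation and returns normally lets the validator continue and report every XSD error. The class name is hypothetical; this is not Coffee's XsdValidationErrorCollector.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.XMLConstants;
import javax.xml.transform.stream.StreamSource;
import javax.xml.validation.Schema;
import javax.xml.validation.SchemaFactory;
import javax.xml.validation.Validator;
import org.xml.sax.ErrorHandler;
import org.xml.sax.SAXException;
import org.xml.sax.SAXParseException;

// Hypothetical collector: gathers every XSD violation instead of stopping at the first one
final class SampleXsdErrorCollector implements ErrorHandler {

    private final List<String> errors = new ArrayList<>();

    @Override
    public void warning(SAXParseException e) {
        // warnings are ignored in this sketch
    }

    @Override
    public void error(SAXParseException e) {
        // returning normally lets the validator continue with the rest of the document
        errors.add(e.getLineNumber() + ":" + e.getColumnNumber() + " " + e.getMessage());
    }

    @Override
    public void fatalError(SAXParseException e) throws SAXException {
        errors.add(e.getLineNumber() + ":" + e.getColumnNumber() + " " + e.getMessage());
        throw e; // not well-formed: validation cannot continue
    }

    static List<String> validate(String xsd, String xml) {
        Schema schema;
        try {
            schema = SchemaFactory.newInstance(XMLConstants.W3C_XML_SCHEMA_NS_URI)
                    .newSchema(new StreamSource(new StringReader(xsd)));
        } catch (SAXException e) {
            throw new IllegalArgumentException("Invalid XSD", e);
        }
        SampleXsdErrorCollector collector = new SampleXsdErrorCollector();
        Validator validator = schema.newValidator();
        validator.setErrorHandler(collector);
        try {
            validator.validate(new StreamSource(new StringReader(xml)));
        } catch (SAXParseException e) {
            // fatal error: already recorded in fatalError()
        } catch (SAXException | IOException e) {
            throw new IllegalStateException("Validation failed unexpectedly", e);
        }
        return collector.errors;
    }
}
```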

XSD (schema) file handling

Handling XSD schema description files requires additional logic, since they can reference each other in various ways.

This problem is addressed by the IXsdResourceResolver interface.

Its built-in, replaceable implementation is hu.icellmobilsoft.coffee.rest.validation.xml.utils.XsdResourceResolver.

XSDs importing each other from a common directory is the simple case, but importing XSDs from another project requires extra logic. This class handles that situation.

2.7.5. XSD Catalog schema management

The XSD Catalog and generation description deals with XSD generation. This section focuses on activation in code, i.e. XML validation using the XSD catalog.

The whole function is performed by the JaxbTool class.

It is intentionally built in a modular way so that it can be easily adapted to specific needs.

As described above, Coffee includes an implementation of IXsdResourceResolver that can read the schema structure specified in the XSD Catalog.

This class is:

@Alternative
@Priority(100)
public class PublicCatalogResolver implements LSResourceResolver, IXsdResourceResolver {
    ...
}

Since Maven-bound dependencies are used to generate the XSD Catalog, for example:

...
<public publicId="http://common.dto.coffee.icellmobilsoft.hu/common" uri="maven:hu.icellmobilsoft.coffee.dto.xsd:coffee-dto-xsd:jar::!/xsd/hu.icellmobilsoft.coffee/dto/common/common.xsd"/>
...

the maven: URI protocol must be handled.

This is done by the hu.icellmobilsoft.coffee.tool.protocol.handler.MavenURLHandler class, which needs to be activated.

This can be done in several ways; the recommended solution is to create the file src/main/resources/META-INF/services/java.net.spi.URLStreamHandlerProvider and put the name of the handling provider class (part of Coffee) into it:

hu.icellmobilsoft.coffee.rest.validation.catalog.MavenURLStreamHandlerProvider

Some systems (e.g. Thorntail) are not able to read this file in time for the application to run.

In such cases, another option is to register the handler through URL.setURLStreamHandlerFactory(factory);.
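For readers unfamiliar with the JDK SPI, here is a minimal sketch of such a provider based on java.net.spi.URLStreamHandlerProvider (Java 9+). The class names are hypothetical, and the handler assumes, purely for illustration, that the entry after "!/" can be loaded from the classpath; Coffee's MavenURLHandler may resolve artifacts differently. When registered through the services file, the provider class must be public and have a public no-argument constructor.

```java
import java.io.IOException;
import java.net.URL;
import java.net.URLConnection;
import java.net.URLStreamHandler;
import java.net.spi.URLStreamHandlerProvider;

// Hypothetical provider, listed in META-INF/services/java.net.spi.URLStreamHandlerProvider
class SampleMavenUrlStreamHandlerProvider extends URLStreamHandlerProvider {

    @Override
    public URLStreamHandler createURLStreamHandler(String protocol) {
        // Returning null lets the JDK fall back to other providers and the built-in handlers
        return "maven".equals(protocol) ? new SampleMavenUrlHandler() : null;
    }
}

// Hypothetical handler: ASSUMES the entry after "!/" is available on the classpath
class SampleMavenUrlHandler extends URLStreamHandler {

    // "maven:group:artifact:jar::!/xsd/common.xsd" -> "xsd/common.xsd"
    static String entryPath(String spec) {
        int idx = spec.indexOf("!/");
        if (idx < 0) {
            throw new IllegalArgumentException("No '!/' entry separator in: " + spec);
        }
        return spec.substring(idx + 2);
    }

    @Override
    protected URLConnection openConnection(URL url) throws IOException {
        String entry = entryPath(url.toExternalForm());
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            loader = SampleMavenUrlHandler.class.getClassLoader();
        }
        URL resource = loader.getResource(entry);
        if (resource == null) {
            throw new IOException("Resource not found on classpath: " + entry);
        }
        return resource.openConnection();
    }
}
```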

Catalog JaxbTool activation

After setting up the maven: URI protocol handling, only 2 things are left to do:

- activate PublicCatalogResolver
- specify the catalog file

PublicCatalogResolver is activated in the classic CDI way:

<?xml version="1.0" encoding="UTF-8"?>
<beans xmlns="http://xmlns.jcp.org/xml/ns/javaee" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://xmlns.jcp.org/xml/ns/javaee http://www.oracle.com/webfolder/technetwork/jsc/xml/ns/javaee/beans_1_1.xsd"
    version="1.1" bean-discovery-mode="all">
    <alternatives>
        <class>hu.icellmobilsoft.coffee.rest.validation.catalog.PublicCatalogResolver</class>
    </alternatives>
</beans>

The catalog XSD file is specified via configuration, more specifically with the coffee.config.xml.catalog.path key, for example:

coffee:
  config:
    xml:
      catalog:
        path: xsd/hu/icellmobilsoft/project/dto/super.catalog.xml

After that, everything is ready and the XSD Catalog takes care of reading the XSD schemas.

2.7.6. Json support

The framework supports JSON format messages in addition to XML for REST communication.

To serialize/deserialize these messages, it uses an external module, Gson, maintained by Google. The framework complements Gson with some custom adapters.

Below is an example JSON, followed by the formats produced by the added adapters.

The ISO 8601 standard is used for the time-related values, except in one case: a Date is written as the number of milliseconds elapsed since 1970-01-01T00:00:00.000 (UTC).

{
    "date": 1549898614051,
    "xmlGregorianCalendar": "2019-02-11T15:23:34.051Z",
    "bytes": "dGVzdFN0cmluZw==",
    "string": "test1",
    "clazz": "hu.icellmobilsoft.coffee.tool.gson.JsonUtilTest",
    "offsetDateTime": "2019-02-11T15:23:34.051Z",
    "offsetTime": "15:23:34.051Z",
    "localDate": "2019-02-11",
    "duration": "P1Y1M1DT1H1M1S"
}

| Java type | Format |
|---|---|
| … | Return value of the … |
| … | Return value of the … |
| Date | Returns the time since 1970-01-01T00:00:00.000 in milliseconds. |
| … | Return value of the … |
| … | Return value of the method … |
| … | Return value of the … |
| … | Return value of … |
| … | Return value of … |

Note: Most of the JSON-related operations are of a utility nature and are publicly available under coffee-tool in the JsonUtil class.
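The following sketch decodes the sample values with JDK types only (it does not use Gson or JsonUtil), to show what each JSON format represents. It assumes the duration field is a javax.xml.datatype.Duration, since java.time.Duration cannot hold year or month components such as P1Y1M; the helper names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.time.Instant;
import java.time.LocalDate;
import java.time.OffsetDateTime;
import java.time.OffsetTime;
import java.util.Base64;
import java.util.Date;
import javax.xml.datatype.DatatypeConfigurationException;
import javax.xml.datatype.DatatypeFactory;
import javax.xml.datatype.Duration;

// Decodes the sample JSON values with JDK types only (no Gson involved)
final class JsonSampleValues {

    // "date": milliseconds since 1970-01-01T00:00:00.000Z
    static Instant fromEpochMillis(long millis) {
        return new Date(millis).toInstant();
    }

    // "xmlGregorianCalendar" / "offsetDateTime": ISO 8601 date-time with offset
    static Instant fromIsoDateTime(String value) {
        return OffsetDateTime.parse(value).toInstant();
    }

    // "offsetTime": ISO 8601 time with offset
    static OffsetTime fromIsoTime(String value) {
        return OffsetTime.parse(value);
    }

    // "localDate": ISO 8601 date
    static LocalDate fromIsoDate(String value) {
        return LocalDate.parse(value);
    }

    // "bytes": Base64-encoded byte array
    static String decodeBytes(String base64) {
        return new String(Base64.getDecoder().decode(base64), StandardCharsets.UTF_8);
    }

    // "duration": XML Schema duration lexical form (may contain years and months)
    static Duration xmlDuration(String lexical) {
        try {
            return DatatypeFactory.newInstance().newDuration(lexical);
        } catch (DatatypeConfigurationException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

The epoch-millisecond "date" and the ISO 8601 "xmlGregorianCalendar"/"offsetDateTime" values in the sample denote the same instant, and "bytes" is the Base64 form of the string testString.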

2.7.7. OpenApiFilter

MicroProfile OpenAPI provides the ability to set additional OpenAPI configuration by implementing the org.eclipse.microprofile.openapi.OASFilter interface.

The hu.icellmobilsoft.coffee.rest.filter.OpenAPIFilter implementation contains the generic error codes related to Coffee error handling and the corresponding response objects, and applies them to all endpoints processed by the filter. Because this information is loaded dynamically, the resulting documentation is more accurate than an openapi.yml config file written in microservices that use Coffee.

To activate this filter, set the mp.openapi.filter configuration key to hu.icellmobilsoft.coffee.rest.filter.OpenAPIFilter, the class that implements it.

Example in a MicroProfile default properties config:

mp.openapi.filter=hu.icellmobilsoft.coffee.rest.filter.OpenAPIFilter

Customizability

The implementation can be refined further by extending it, for example by adding a status code mapping:

package hu.icellmobilsoft.test.rest.filter;

...

@Vetoed
public class CustomerOpenAPIFilter extends OpenAPIFilter {

    private static final String CUSTOM_999_RESPONSE = "#/components/schemas/Custom999Response";

    @Override
    protected Map<Integer, APIResponse> getCommonApiResponseByStatusCodeMap() { // (1)
        Map<Integer, APIResponse> apiResponseByStatusCodeMap = super.getCommonApiResponseByStatusCodeMap();
        APIResponse customApiResponse = OASFactory.createAPIResponse() //
                .content(OASFactory.createContent()
                        .addMediaType(MediaType.APPLICATION_JSON,
                                OASFactory.createMediaType().schema(OASFactory.createSchema().ref(CUSTOM_999_RESPONSE)))
                        .addMediaType(MediaType.APPLICATION_XML,
                                OASFactory.createMediaType().schema(OASFactory.createSchema().ref(CUSTOM_999_RESPONSE)))
                        .addMediaType(MediaType.TEXT_XML,
                                OASFactory.createMediaType().schema(OASFactory.createSchema().ref(CUSTOM_999_RESPONSE))))
                .description(Response.Status.BAD_REQUEST.getReasonPhrase() //
                        + "\n" + "* Custom 999 error" //
                        + "\n\t **resultCode** = *OPERATION_FAILED*" //
                );
        apiResponseByStatusCodeMap.put(999, customApiResponse);
        return apiResponseByStatusCodeMap;
    }

    @Override
    protected List<Parameter> getCommonRequestHeaderParameters() { // (2)
        Parameter xCustomHeader1 = OASFactory.createObject(Parameter.class).name("X-CUSTOM-HEADER-1").in(Parameter.In.HEADER).required(false)
                .description("Description of custom header 1").schema(OASFactory.createObject(Schema.class).type(Schema.SchemaType.STRING));
        Parameter xCustomHeader2 = OASFactory.createObject(Parameter.class).name("X-CUSTOM-HEADER-2").in(Parameter.In.HEADER).required(false)
                .description("Description of custom header 2").schema(OASFactory.createObject(Schema.class).type(Schema.SchemaType.STRING));
        List<Parameter> headerParams = new ArrayList<>();
        headerParams.add(xCustomHeader1);
        headerParams.add(xCustomHeader2);
        return headerParams;
    }
}

| 1 | Example of adding a custom response with HTTP status code 999. Note that Custom999Response must exist in the DTOs. |
| 2 | Example of specifying 2 custom headers with description and schema. |

and the configuration changes accordingly:

mp.openapi.filter=hu.icellmobilsoft.test.rest.filter.CustomerOpenAPIFilter

2.7.8. MessageBodyWriter

The module contains a MessageBodyWriter for application/octet-stream + BaseResultType.

This allows the system to send any of its own BaseResultType DTO objects as an octet-stream response.

This is useful, for example, when file generation ends with an error.

2.7.9. ProjectStage

The module contains a DeltaSpike-inspired ProjectStage object that can be injected. Its role is to specify at runtime, via configuration, whether the project is running in production, development or test mode.

It can be used by specifying one of 2 configurations:

- coffee.app.projectStage
- org.apache.deltaspike.ProjectStage

The specified values are converted to hu.icellmobilsoft.coffee.rest.projectstage.ProjectStageEnum.

Each enum value lists the config values that map to it.

| Important: if no config value is specified, or the value is not found in the list of any enum value, ProjectStage behaves as PRODUCTION! |

Configurations can be specified from multiple locations using MicroProfile Config, but only the first one in the order described above is considered.

Currently, if the ProjectStage value is not PRODUCTION, the system returns a more detailed response for errors.
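The resolution rules above (the first configured key wins, unknown or missing values fall back to PRODUCTION) can be sketched as follows. SampleProjectStage and its accepted values are hypothetical, since the real value lists live in ProjectStageEnum; case-insensitive matching is also an assumption.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical enum; the real ProjectStageEnum defines its own accepted config values
enum SampleProjectStage {
    PRODUCTION("production", "prod"),
    DEVELOPMENT("development", "dev"),
    TEST("test");

    private final List<String> configValues;

    SampleProjectStage(String... configValues) {
        this.configValues = Arrays.asList(configValues);
    }

    static SampleProjectStage fromConfig(Map<String, String> config) {
        // Only the first key present is considered, in the documented order
        String raw = config.containsKey("coffee.app.projectStage")
                ? config.get("coffee.app.projectStage")
                : config.get("org.apache.deltaspike.ProjectStage");
        if (raw == null) {
            return PRODUCTION; // nothing configured
        }
        String normalized = raw.trim().toLowerCase(Locale.ROOT);
        for (SampleProjectStage stage : values()) {
            if (stage.configValues.contains(normalized)) {
                return stage;
            }
        }
        return PRODUCTION; // unknown value
    }
}
```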

Using this works as follows:

@Dependent
public class MyBean {

    private @Inject ProjectStage projectStage;

    public void fn() {
        if (projectStage.isProductionStage()) {
            // do some production stuff...
        }
    }
}

For finer distinctions, use it as follows:

@Dependent
public class MyBean {

    private @Inject ProjectStage projectStage;

    public void fn() {
        if (projectStage.getProjectStageEnum() == ProjectStageEnum.DEVELOPMENT) {
            // do some development stuff...
        }
    }
}

2.7.10. Jsonb configuration

The default Jsonb implementation is Eclipse Yasson. Its default settings can be changed with the following configuration:

coffee:
  jsonb:
    config:
      propertyvisibilitystrategyclass: "hu.icellmobilsoft.coffee.rest.provider.FieldOnlyVisibilityStrategy" # (1)
      binarydatastrategy: "BASE_64" # (2)

In the above configuration, 2 elements can be set:

| 1 | Fully qualified name of the class implementing the jakarta.json.bind.config.PropertyVisibilityStrategy interface. |
| 2 | One of the constants defined in jakarta.json.bind.config.BinaryDataStrategy (e.g. BASE_64), which determines how binary data is handled. |

2.8. coffee-grpc

The purpose of this module is to support gRPC communication and handling.

2.8.1. coffee-grpc-api

A collector for general gRPC handling of the Coff:ee API (annotations, version, …).

2.8.2. coffee-grpc-base

A collection of general Protobuf and gRPC classes. It includes exception handling, status handling, and other general CDI (Contexts and Dependency Injection) and Coff:ee functionalities.

It also contains a generic ExceptionMapper interface following the JAX-RS pattern, which allows a specific Exception type to be converted to a gRPC Status using the capabilities provided by CDI.
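The JAX-RS-style mapper pattern can be sketched in plain Java as follows. In Coffee the mapper is selected through CDI; here a simple registry that walks up the exception's class hierarchy stands in for that, and SampleStatusCode is a hypothetical substitute for io.grpc.Status so the sketch has no dependencies.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for io.grpc.Status codes
enum SampleStatusCode { INVALID_ARGUMENT, INTERNAL }

// JAX-RS-style contract: one mapper implementation per exception type
interface SampleGrpcExceptionMapper<E extends Throwable> {
    SampleStatusCode toStatus(E exception);
}

// Hypothetical registry replacing CDI-based mapper lookup
final class SampleMapperRegistry {

    private final Map<Class<? extends Throwable>, SampleGrpcExceptionMapper<? extends Throwable>> mappers = new HashMap<>();

    <E extends Throwable> SampleMapperRegistry register(Class<E> type, SampleGrpcExceptionMapper<E> mapper) {
        mappers.put(type, mapper);
        return this;
    }

    // Walks up the class hierarchy so the most specific registered mapper wins
    @SuppressWarnings("unchecked")
    SampleStatusCode map(Throwable exception) {
        for (Class<?> type = exception.getClass(); type != null; type = type.getSuperclass()) {
            SampleGrpcExceptionMapper<Throwable> mapper = (SampleGrpcExceptionMapper<Throwable>) mappers.get(type);
            if (mapper != null) {
                return mapper.toStatus(exception);
            }
        }
        return SampleStatusCode.INTERNAL; // fallback, similar in spirit to a general exception mapper
    }
}
```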

2.8.3. coffee-grpc-protoc

A helper tool used for proto → class generation. Its logic uses the Mustache template system, through the com.salesforce.jprotoc.ProtocPlugin system.

Example usage in a pom.xml:

<build>
    <plugins>
        <plugin>
            <groupId>com.github.os72</groupId>
            <artifactId>protoc-jar-maven-plugin</artifactId>
            <configuration>
                ...
                <outputTargets>
                    ...
                    <outputTarget>
                        <type>grpc-coffee</type>
                        <pluginArtifact>hu.icellmobilsoft.coffee:coffee-grpc-protoc:${version.hu.icellmobilsoft.coffee}</pluginArtifact>
                    </outputTarget>
                </outputTargets>
            </configuration>
            ...
        </plugin>
    </plugins>
</build>

A more complex example can be found in the backend-sampler project's pom.xml.

2.8.4. coffee-grpc-server-extension

Module containing a CDI-compatible implementation of a gRPC server.

It collects all classes implementing IGrpcService and passes them to the gRPC server through GrpcServerManager.

Implemented features:

- gRPC server configuration based on NettyServerBuilder
- MDC (Mapped Diagnostic Context) handling
- Request/Response log, applicable with the LogSpecifier annotation on the gRPC service implementation method/class
- Exception handling
  - gRPC status code mapping
    - General exception: hu.icellmobilsoft.coffee.grpc.server.mapper.GrpcGeneralExceptionMapper
    - BaseException exception: hu.icellmobilsoft.coffee.grpc.server.mapper.GrpcBaseExceptionMapper
  - gRPC header response additions:
    - Business error code (com.google.rpc.ErrorInfo)
    - Business error code translation by request locale (com.google.rpc.LocalizedMessage)
    - Debug information (com.google.rpc.DebugInfo)

Server thread pool

Thread handling is an important part of the gRPC server. Two solutions have been implemented:

- ThreadPoolExecutor - default thread pool: configurable through the coffee.grpc.server.threadpool.default configuration.
- ManagedExecutorService - Jakarta EE managed thread pool: a thread pool managed by the server, with context propagation support.

coffee:
  grpc:
    server:
      port: 8199 # default 8199
      maxConnectionAge: 60000000 # nano seconds, default Long.MAX_VALUE
      maxConnectionAgeGrace: 60000000 # nano seconds, default Long.MAX_VALUE
      maxInboundMessageSize: 4194304 # Bytes, default 4 * 1024 * 1024 (4MiB)
      maxInboundMetadataSize: 8192 # Bytes, default 8192 (8KiB)
      maxConnectionIdle: 60000000 # nano seconds, default Long.MAX_VALUE
      keepAliveTime: 5 # minutes, default 5
      keepAliveTimeout: 20 # seconds, default 20
      permitKeepAliveTime: 5 # minutes, default 5
      permitKeepAliveWithoutCalls: false # default false
      threadPool:
        default:
          corePoolSize: 64 # default 32
          maximumPoolSize: 64 # default 32
          keepAliveTime: 60000 # milliseconds, default 0
        jakarta:
          active: true # default false (1)

| 1 | If true, coffee.grpc.server.threadpool.default is ignored. |
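For orientation, the default thread pool values above map onto the JDK ThreadPoolExecutor constructor as in the following sketch; such an executor could then be passed to grpc-java's ServerBuilder#executor. The class name and the queue choice are assumptions, not taken from Coffee's code.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: a JDK ThreadPoolExecutor built from the threadPool.default values
final class SampleGrpcServerPool {

    static ThreadPoolExecutor create(int corePoolSize, int maximumPoolSize, long keepAliveMillis) {
        return new ThreadPoolExecutor(
                corePoolSize,                    // corePoolSize
                maximumPoolSize,                 // maximumPoolSize
                keepAliveMillis,                 // keepAliveTime (configured in milliseconds)
                TimeUnit.MILLISECONDS,
                // ASSUMPTION: unbounded work queue
                new LinkedBlockingQueue<>());
    }
}
```

With an unbounded LinkedBlockingQueue, the pool never grows beyond corePoolSize, so keepAliveTime only affects threads above the core size; this is one reason to keep corePoolSize equal to maximumPoolSize, as in the example.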

2.8.5. gRPC client (coffee-grpc-client-extension)

It provides support for implementing a gRPC client, including:

- Configuration management
- Request logging
- Response logging

coffee:
  grpc:
    client:
      _configKey_:
        host: localhost # default localhost
        port: 8199 # default 8199
        maxInboundMetadataSize: 8192 # Bytes, default 8192 (8KiB)

@Inject
@GrpcClient(configKey = "_configKey_") // (1)
private DummyServiceGrpc.DummyServiceBlockingStub dummyGrpcServiceStub; // (2)

...

// add header
DummyServiceGrpc.DummyServiceBlockingStub stub = GrpcClientHeaderHelper
        .addHeader(dummyGrpcServiceStub, GrpcClientHeaderHelper.headerWithSid(errorLanguage)); // (3)

// equivalent to `stub.getDummy(dummyRequest);` + exception handling
DummyResponse helloResponse = GrpcClientWrapper.call(stub::getDummy, dummyRequest); // (4)

...

| 1 | Configuration key for connection parameters (e.g., server host and port) |
| 2 | Generated service stub |
| 3 | Add custom header |
| 4 | gRPC service call + exception handling |
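The call-plus-exception-handling idea behind GrpcClientWrapper.call can be sketched in plain Java as follows. This is a hypothetical illustration, not Coffee's implementation: SampleBusinessException stands in for BaseException, and the conversion simply wraps any runtime failure (in grpc-java, blocking stubs report failures as io.grpc.StatusRuntimeException, which is a RuntimeException).

```java
import java.util.function.Function;

// Hypothetical checked business exception, standing in for Coffee's BaseException
class SampleBusinessException extends Exception {
    SampleBusinessException(String message, Throwable cause) {
        super(message, cause);
    }
}

// Hypothetical wrapper: runs a stub call and converts runtime failures into a checked exception
final class SampleGrpcCall {

    static <REQ, RESP> RESP call(Function<REQ, RESP> method, REQ request) throws SampleBusinessException {
        try {
            return method.apply(request);
        } catch (RuntimeException e) {
            throw new SampleBusinessException("gRPC call failed: " + e.getMessage(), e);
        }
    }
}
```

A blocking stub method reference such as stub::getDummy fits the Function parameter, so a call would read SampleGrpcCall.call(stub::getDummy, dummyRequest).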

2.8.6. gRPC Metrics

The gRPC server and client can optionally activate interceptors to provide metric data. For this, only the Maven dependency needs to be included.

For the server:

<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-server-extension</artifactId>
</dependency>
<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-metrics-mpmetrics</artifactId>
</dependency>

For the client:

<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-client-extension</artifactId>
</dependency>
<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-metrics-mpmetrics</artifactId>
</dependency>

If the metric module is not included as a dependency, the server/client works unchanged, only no metric data is provided.

Provided metrics:

- gRPC server
  - Received request counter
  - Responded response counter
  - Request-response processing per second
- gRPC client
  - Sent request counter
  - Responded response counter
  - Request-response processing per second

2.8.7. gRPC Tracing

The gRPC server and client can optionally activate interceptors to provide tracing data. For this, only the Maven dependency needs to be included.

Server with coffee-grpc-tracing-opentracing:

<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-server-extension</artifactId>
</dependency>
<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-tracing-opentracing</artifactId>
</dependency>

Server with coffee-grpc-tracing-telemetry:

<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-server-extension</artifactId>
</dependency>
<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-tracing-telemetry</artifactId>
</dependency>

Client with coffee-grpc-tracing-opentracing:

<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-client-extension</artifactId>
</dependency>
<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-tracing-opentracing</artifactId>
</dependency>

Client with coffee-grpc-tracing-telemetry:

<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-client-extension</artifactId>
</dependency>
<dependency>
    <groupId>hu.icellmobilsoft.coffee</groupId>
    <artifactId>coffee-grpc-tracing-telemetry</artifactId>
</dependency>

If the tracing module is not included as a dependency, the server/client works unchanged, only no tracing data is provided.

2.8.8. coffee-dto/coffee-dto-xsd2proto

A collection of the proto files generated with schema2proto from the general XSD descriptors (coffee-dto-xsd module), together with other manually created proto files. This package lets projects use the Coff:ee proto files without having to generate them again.

Unfortunately, the schema2proto plugin used is not compatible with Windows, so the generation is not part of the automatic build. If the XSD files change, the following command needs to be executed on a Linux-compatible system:

mvn clean install -Dschema2proto -Dcopy-generated-sources

The schema2proto parameter activates XSD → proto generation, and the copy-generated-sources parameter copies the generated proto files into the sources. Afterwards, the changes appear in the git diff.

2.8.9. coffee-dto/coffee-dto-stub-gen

Contains all Coff:ee proto files and their generated classes. The plugin generates an interface descriptor that can be implemented in a full CDI environment. It also generates a BindableService implementation that delegates gRPC calls to the implemented interface.

3. Coffee model

A model module can have several submodules containing database tables for a specific purpose.

3.1. coffee-model-base

The purpose of this module is to define the base table structure.

Each table must contain the common ID, AUDIT and VERSION columns. The module provides ancestor classes, an ID generator and an audit field loader for these. It also includes DeltaSpike Data to facilitate use of the Criteria API, and the Maven build also generates the SingularAttribute metamodel classes for these ancestors.

3.1.1. ID generation

The purpose of ID generation is to work with unique and non-sequential ID values (strings) under all circumstances.[1] The EntityIdGenerator.java class is used for generation; by default it is applied through an annotation on the entity class, but if needed it can also be called directly through its generate() method. The generate method creates a new ID for the entity only if the ID value is not yet set (null); otherwise it keeps the existing ID.

The algorithm generates an ID of up to 16 characters from [0-9a-zA-Z], using random characters and taking into account the nanosecond part of the current time, e.g. '2ZJMG008YRR4E5NW'.
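To make the description concrete, here is a sketch of an ID generator with the same properties (a time-based component including nanoseconds, random filler characters, at most 16 characters of [0-9a-zA-Z]). It is not EntityIdGenerator's algorithm; the class name and the encoding details are hypothetical.

```java
import java.security.SecureRandom;
import java.time.Instant;

// Illustrative only: NOT the EntityIdGenerator algorithm, just the same idea
final class SampleIdGenerator {

    private static final char[] ALPHABET =
            "0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ".toCharArray();
    private static final int LENGTH = 16;
    private static final SecureRandom RANDOM = new SecureRandom();

    // Mirrors the documented contract: keep an existing ID, generate one only if it is null
    static String generateIfAbsent(String existingId) {
        return existingId != null ? existingId : generate();
    }

    static String generate() {
        Instant now = Instant.now();
        // Epoch seconds combined with the nanosecond part (about 1.7e18 today, fits in a long)
        long time = now.getEpochSecond() * 1_000_000_000L + now.getNano();
        StringBuilder id = new StringBuilder(LENGTH);
        // Base-62 digits, least significant first, so consecutive IDs do not share a prefix
        while (time > 0) {
            id.append(ALPHABET[(int) (time % ALPHABET.length)]);
            time /= ALPHABET.length;
        }
        // The time part needs at most 11 digits; fill the rest with random characters
        while (id.length() < LENGTH) {
            id.append(ALPHABET[RANDOM.nextInt(ALPHABET.length)]);
        }
        return id.toString();
    }
}
```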

Example code for an identifier used in the AbstractIdentifiedEntity.java class:

@Id
@Column(name = "X__ID", length = 30)
@GenericGenerator(name = "entity-id-generator", strategy = "hu.icellmobilsoft.coffee.model.base.generator.EntityIdGenerator")
@GeneratedValue(generator = "entity-id-generator", strategy = GenerationType.IDENTITY)
private String id;

Example code for an Entity where an identifier is required:

@Entity
@Table(name = "SIMPLE_TABLE")
public class SimpleEntity extends AbstractIdentifiedEntity {
}

We have the option to set the timezone through an environment variable or a system property. If it is not set, the system's timezone is used by default.

The variable: COFFEE_MODEL_BASE_JAVA_TIME_TIMEZONE_ID

For example, as an environment variable of a service:

service:
  environment:
    COFFEE_MODEL_BASE_JAVA_TIME_TIMEZONE_ID: Europe/Budapest

as a JVM system property:

java -Dcoffee.model.base.java.time.timezone.id=Europe/Budapest

or programmatically:

System.setProperty("coffee.model.base.java.time.timezone.id", "Europe/Budapest");
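The lookup described above could be sketched as follows. The precedence between the system property and the environment variable is an assumption, since the text does not specify it; the class name is hypothetical.

```java
import java.time.ZoneId;

// Hypothetical resolver for the timezone setting described above
final class SampleTimezoneResolver {

    static final String PROPERTY = "coffee.model.base.java.time.timezone.id";
    static final String ENV_VARIABLE = "COFFEE_MODEL_BASE_JAVA_TIME_TIMEZONE_ID";

    static ZoneId resolve() {
        // ASSUMPTION: the system property takes precedence over the environment variable
        String id = System.getProperty(PROPERTY);
        if (id == null || id.isBlank()) {
            id = System.getenv(ENV_VARIABLE);
        }
        // Neither is set: fall back to the system default timezone
        return (id == null || id.isBlank()) ? ZoneId.systemDefault() : ZoneId.of(id.trim());
    }
}
```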

3.1.2. Audit columns

The audit columns are used to track table-level changes, e.g. the insertion of a new record or the modification of an existing one.

Separate columns are used for these purposes.

Insertions are tracked in the X__INSDATE and X__INSUSER columns; their values are only stored on insert, not on update.

Modifications are tracked in the X__MODDATE and X__MODUSER columns.

These are predefined in the AbstractAuditEntity.java class and are filled automatically through the appropriate annotations (e.g. @CreatedOn, @ModifiedOn).

Example code for an Entity where audit columns are required:

@Entity
@Table(name = "SIMPLE_TABLE")
public class SimpleEntity extends AbstractAuditEntity {
}

3.1.3. Version column

The version column is a strictly technical element defined in the AbstractEntity.java class. Hibernate uses it through the @Version annotation for optimistic locking: during a merge operation, a concurrent modification of the same entity is detected instead of being silently overwritten.
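Optimistic locking itself can be illustrated without JPA: an update succeeds only if the version read earlier still matches the stored one, and every successful update increments the version. The in-memory store below is hypothetical; with JPA/Hibernate the same check happens in the UPDATE statement's WHERE clause, and a mismatch raises an OptimisticLockException.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory store illustrating version-based optimistic locking
final class SampleVersionedStore {

    static final class Row {
        final String data;
        final long version;

        Row(String data, long version) {
            this.data = data;
            this.version = version;
        }
    }

    private final Map<String, Row> rows = new HashMap<>();

    void insert(String id, String data) {
        rows.put(id, new Row(data, 0L));
    }

    Row read(String id) {
        return rows.get(id);
    }

    // Comparable to: UPDATE ... SET DATA = ?, VERSION = VERSION + 1 WHERE ID = ? AND VERSION = ?
    void update(String id, String newData, long expectedVersion) {
        Row current = rows.get(id);
        if (current == null || current.version != expectedVersion) {
            throw new IllegalStateException("Stale data: row " + id + " was modified concurrently");
        }
        rows.put(id, new Row(newData, expectedVersion + 1));
    }
}
```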

It is recommended to derive each entity class from the AbstractIdentifiedAuditEntity.java class, so that it already contains the ID, AUDIT and VERSION columns:

@Entity
@Table(name = "SIMPLE_TABLE")
public class SimpleEntity extends AbstractIdentifiedAuditEntity {
}

3.2. coffee-model-security

The purpose of this module is to provide a generic privilege management and collection of related entities.

Based on the logic of the entitlement systems used in previous projects, the entity classes of the basic tables have been collected here. A project is free to use them independently; therefore, there are no relationships between the entities, so as not to limit the possible combinations.

4. Coffee modules

4.1. coffee-module-activemq

The purpose of this module is to connect to Apache ActiveMQ.

It contains various server classes and methods for JMS management.

4.2. coffee-module-csv

Module to generate a CSV file from Java beans using binding annotations, or parse a CSV file to produce beans.

The bean annotation looks like this:

public class TestBean {

    @CsvBindByNamePosition(position = 0, column = "IDENTIFIER")
    private long id;

    @CsvBindByNamePosition(position = 4)
    private String name;

    @CsvBindByNamePosition(position = 2)
    private boolean active;

    @CsvDate("yyyy-MM-dd")
    @CsvBindByNamePosition(position = 3)
    private LocalDate creationDate;

    @CsvBindByNamePosition(position = 1)
    private Status status;

    // getters, setters...
}

The module provides the @CsvBindByNamePosition annotation to specify the position and name of a CSV column.
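How a position and an optional column name can drive the header order is sketched below with plain reflection. SampleCsvColumn is a hypothetical annotation, not @CsvBindByNamePosition; the fallback of upper-casing the field name is inferred from the CREATIONDATE column in the sample output below and is an assumption.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.util.Arrays;
import java.util.Comparator;
import java.util.Locale;
import java.util.stream.Collectors;

// Hypothetical annotation mirroring the position/column idea
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface SampleCsvColumn {
    int position();
    String column() default "";
}

final class SampleCsvHeader {

    // Builds the quoted header line, ordering annotated fields by position
    static String header(Class<?> type, char separator) {
        return Arrays.stream(type.getDeclaredFields())
                .filter(f -> f.isAnnotationPresent(SampleCsvColumn.class))
                .sorted(Comparator.comparingInt(f -> f.getAnnotation(SampleCsvColumn.class).position()))
                .map(SampleCsvHeader::columnName)
                .map(name -> "\"" + name + "\"")
                .collect(Collectors.joining(String.valueOf(separator)));
    }

    // Explicit column name if given, otherwise the upper-cased field name
    private static String columnName(Field field) {
        String explicit = field.getAnnotation(SampleCsvColumn.class).column();
        return explicit.isEmpty() ? field.getName().toUpperCase(Locale.ROOT) : explicit;
    }
}

// Sample bean; declaration order deliberately differs from the column positions
class SampleCsvBean {
    @SampleCsvColumn(position = 2)
    private boolean active;

    @SampleCsvColumn(position = 0, column = "IDENTIFIER")
    private long id;

    @SampleCsvColumn(position = 1)
    private String status;
}
```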

A list of instances of such a class can be converted to CSV in the default CSV format with the following call:

String csv = CsvUtil.toCsv(beans, TestBean.class);

The result of the above call:

"IDENTIFIER"; "STATUS"; "ACTIVE"; "CREATIONDATE"; "NAME"
"11";"IN_PROGRESS";"true";"2021-11-23";"foo"
"12";"DONE";"false";"2020-01-02";"bar"

To change the CSV format, use the overloaded methods with a config:

CsvWriterConfig csvWriterConfig = new CsvWriterConfig.Builder()
        .withQuotechar('\'')
        .withSeparator(',')
        .build();
String csv = CsvUtil.toCsv(beans, TestBean.class, csvWriterConfig);

The result of the above call:

'IDENTIFIER','STATUS','ACTIVE','CREATIONDATE','NAME'
'11','IN_PROGRESS','true','2021-11-23','foo'
'12','DONE','false','2020-01-02','bar'

To convert back from the default CSV format, the following call can be used:

List<TestBean> beans = CsvUtil.toBean(csv, TestBean.class);

To convert back from a custom CSV format, the following call can be used:

CSVParserBuilder csvParserBuilder = new CSVParserBuilder()
        .withSeparator(',')
        .withQuoteChar('\'');
List<TestBean> beans = CsvUtil.toBean(csv, TestBean.class, csvParserBuilder);

4.2.1. Localization

For the fields whose values are to be localized, LocalizationConverter must be specified as the converter, which can be done with the annotations starting with @CsvCustomBind:

@CsvCustomBindByNamePosition(position = 0, converter = LocalizationConverter.class)
private Status status;

@CsvCustomBindByNamePosition(position = 1, converter = LocalizationConverter.class)
private boolean active;

If you want to localize custom types as well, or change the logic for the already handled types, you can do so by deriving from LocalizationConverter.

Then the translations of the column names and of the localized field values must be specified for the given language (e.g. in the messages_en.properties file):

java.lang.Boolean.TRUE=Yes
java.lang.Boolean.FALSE=No
hu.icellmobilsoft.coffee.module.csv.LocalizedTestBean.status=Status
hu.icellmobilsoft.coffee.module.csv.LocalizedTestBean.active=Active
hu.icellmobilsoft.coffee.module.csv.LocalizedTestBean$Status.IN_PROGRESS=Progress
hu.icellmobilsoft.coffee.module.csv.LocalizedTestBean$Status.DONE=Done

Finally, call the CsvUtil#toLocalizedCsv method with the selected language:

String csv = CsvUtil.toLocalizedCsv(beans, LocalizedTestBean.class, "en");

The code in the examples results in the following CSV:

"Status"; "Active"
"Progress"; "Yes"
"Done"; "No"

4.3. coffee-module-document

The purpose of this module is to store and manage template texts. If you have an SMS, email, PDF or other text to be filled with variable parameters in different languages, this module handles it.

It contains an optional generic DTO submodule in which the communication objects are predefined. The working principle: the template code and the key-value pairs of the parameters are passed in, the parameters are substituted into the template, and the result is returned in the response. If not all required parameters are provided, the default values, which are also stored in the module, are used. The module can also save files, but this is of limited use because it stores them in a database.
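The fill-with-defaults principle can be sketched as follows. The ${name} placeholder syntax and the class name are hypothetical; the module's actual template format is not described here.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical template filler: caller parameters first, stored defaults second
final class SampleTemplateFiller {

    // Hypothetical placeholder syntax: ${name}
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([A-Za-z0-9_.-]+)\\}");

    static String fill(String template, Map<String, String> params, Map<String, String> defaults) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuilder result = new StringBuilder();
        while (matcher.find()) {
            String key = matcher.group(1);
            // Unknown keys keep their placeholder text untouched
            String value = params.getOrDefault(key, defaults.getOrDefault(key, matcher.group()));
            matcher.appendReplacement(result, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(result);
        return result.toString();
    }
}
```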

4.4. coffee-module-etcd

Module for managing ETCD; it implements a MicroProfile ConfigSource.

It is based on the official

<dependency>
    <groupId>io.etcd</groupId>
    <artifactId>jetcd-core</artifactId>
    <version>0.7.5</version>
</dependency>

driver, extended with enterprise usage options. A more detailed description is available on a separate page.

4.4.1. Deploying configurations, configuring ETCD host

To use ETCD configurations, add the coffee-module-etcd module as a dependency. It provides the helper classes needed for configuration management and contains an EtcdConfig implementation that uses the coffee.etcd.default.url configuration property as the ETCD endpoint address.

The following optional properties allow additional configuration of the ETCD connection:

- coffee.etcd.default.connection.timeout.millis: connection timeout in milliseconds
- coffee.etcd.default.retry.delay: retry delay
- coffee.etcd.default.retry.max.delay: maximum retry delay
- coffee.etcd.default.keepalive.time.seconds: keepalive time in seconds
- coffee.etcd.default.keepalive.timeout.seconds: keepalive timeout in seconds
- coffee.etcd.default.keepalive.without.calls: keepalive without calls (true/false)
- coffee.etcd.default.retry.chrono.unit: retry period unit
- coffee.etcd.default.retry.max.duration.seconds: maximum retry duration in seconds
- coffee.etcd.default.wait.for.ready: enable gRPC wait-for-ready semantics (true/false)
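The properties above carry different units and types. As a hypothetical sketch (not coffee's EtcdConfig), they can be read into typed values with java.util.Properties; an empty Optional means "not configured", so no default values are invented here. Two things are assumptions: that retry.chrono.unit holds a ChronoUnit constant name such as MILLIS, and that retry.delay and retry.max.delay are counted in that unit.

```java
import java.time.Duration;
import java.time.temporal.ChronoUnit;
import java.util.Optional;
import java.util.Properties;

// Hypothetical typed view of the coffee.etcd.default.* properties; not coffee's EtcdConfig.
// An empty Optional means "not configured": keep whatever default the driver uses.
final class EtcdConnectionSettings {

    private static final String PREFIX = "coffee.etcd.default.";

    private final Properties properties;

    EtcdConnectionSettings(Properties properties) {
        this.properties = properties;
    }

    private Optional<String> raw(String suffix) {
        return Optional.ofNullable(properties.getProperty(PREFIX + suffix)).map(String::trim);
    }

    Optional<String> url() {
        return raw("url");
    }

    Optional<Duration> connectionTimeout() {
        return raw("connection.timeout.millis").map(Long::parseLong).map(Duration::ofMillis);
    }

    // Plain numbers; their unit is presumably retry.chrono.unit (assumption)
    Optional<Long> retryDelay() {
        return raw("retry.delay").map(Long::parseLong);
    }

    Optional<Long> retryMaxDelay() {
        return raw("retry.max.delay").map(Long::parseLong);
    }

    // Assumes ChronoUnit constant names, e.g. MILLIS or SECONDS
    Optional<ChronoUnit> retryChronoUnit() {
        return raw("retry.chrono.unit").map(ChronoUnit::valueOf);
    }

    Optional<Duration> retryMaxDuration() {
        return raw("retry.max.duration.seconds").map(Long::parseLong).map(Duration::ofSeconds);
    }

    Optional<Duration> keepaliveTime() {
        return raw("keepalive.time.seconds").map(Long::parseLong).map(Duration::ofSeconds);
    }

    Optional<Duration> keepaliveTimeout() {
        return raw("keepalive.timeout.seconds").map(Long::parseLong).map(Duration::ofSeconds);
    }

    Optional<Boolean> keepaliveWithoutCalls() {
        return raw("keepalive.without.calls").map(Boolean::parseBoolean);
    }

    Optional<Boolean> waitForReady() {
        return raw("wait.for.ready").map(Boolean::parseBoolean);
    }
}
```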

On the backend side, there are several ways to manage configurations. These typically support and complement each other.

4.4.2. Microprofile-config

The microprofile-config annotation (@ConfigProperty) can be used to inject specific configuration values. For microprofile-config to find the ETCD store, one of the following ConfigSource implementations of coffee-module-etcd has to be activated in your project:

- hu.icellmobilsoft.coffee.module.etcd.producer.DefaultEtcdConfigSource - default config source
- hu.icellmobilsoft.coffee.module.etcd.producer.CachedEtcdConfigSource - cached config source. The cache remembers the retrieved result for 30 minutes, even if ETCD does not contain the key, which avoids many repeated queries. The cache is a thread-safe singleton; it can be cleared by calling EtcdConfigSourceCache.instance().clear().
- hu.icellmobilsoft.coffee.module.etcd.producer.RuntimeEtcdConfigSource - can be activated at runtime as needed (e.g. in AfterBeanDiscovery) as follows: RuntimeEtcdConfigSource.setActive(true);
- hu.icellmobilsoft.coffee.module.etcd.producer.FilteredEtcdConfigSource - decides, based on configured regex pattern parameters, whether to look up the key in ETCD:

coffee:
  configSource:
    FilteredEtcdConfigSource:
      pattern:
        include: ^(public|private)\\.
        exclude: ^private\\.

  The example works with the following logic:

  - exclude - evaluated first, before include. Optional; if not specified, no exclusion filtering is applied. If the requested key matches the pattern, ETCD is not queried for it.
  - include - optional; if not specified, no inclusion filtering is applied and every remaining key passes. ETCD is queried for the key only if the requested key matches the pattern.

  Examples:

  - "private.sample.key1" - ETCD is not queried, because the exclude pattern filters it out.
  - "public.sample.key2" - ETCD is queried, because the exclude pattern lets it through and the include pattern matches.
  - "org.sample.key3" - ETCD is not queried, because the exclude pattern lets it through but the include pattern filters it out.
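The include/exclude decision of FilteredEtcdConfigSource can be sketched as follows. This is not the coffee implementation: the EtcdKeyFilter class is hypothetical, and using Matcher.find() (rather than matches()) is an assumption based on the example patterns being anchored with ^. The patterns are written as Java string literals.

```java
import java.util.regex.Pattern;

// Hypothetical sketch of the FilteredEtcdConfigSource decision logic; not coffee's code.
final class EtcdKeyFilter {

    private final Pattern include; // null = include filtering not activated
    private final Pattern exclude; // null = exclude filtering not activated

    EtcdKeyFilter(String includeRegex, String excludeRegex) {
        this.include = includeRegex == null ? null : Pattern.compile(includeRegex);
        this.exclude = excludeRegex == null ? null : Pattern.compile(excludeRegex);
    }

    /** Returns true if ETCD should be queried for the given key. */
    boolean shouldQueryEtcd(String key) {
        // exclude is evaluated first: a match means ETCD is not queried
        if (exclude != null && exclude.matcher(key).find()) {
            return false;
        }
        // include: if configured, only matching keys are looked up in ETCD
        return include == null || include.matcher(key).find();
    }
}
```

With new EtcdKeyFilter("^(public|private)\\.", "^private\\."), the three example keys give false, true and false, matching the list above.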

Example ConfigSource activation (in the META-INF/services/org.eclipse.microprofile.config.spi.ConfigSource file):

hu.icellmobilsoft.coffee.module.etcd.producer.CachedEtcdConfigSource

The priority (ordinal) of these ConfigSources is 150.
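The CachedEtcdConfigSource behaviour described above (remembering a lookup result for 30 minutes, even when the key is missing from ETCD) is a form of negative caching. The following hypothetical sketch shows the idea with a ConcurrentHashMap; it is not coffee's EtcdConfigSourceCache, and the TtlConfigCache name, the loader function and the injectable time source are assumptions made so the behaviour can be tested.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical thread-safe TTL cache that also remembers missing keys (negative caching).
// Not coffee's EtcdConfigSourceCache.
final class TtlConfigCache {

    private static final class Entry {
        final Optional<String> value; // Optional.empty() = the key was not found
        final Instant expiresAt;

        Entry(Optional<String> value, Instant expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final ConcurrentMap<String, Entry> entries = new ConcurrentHashMap<>();
    private final Duration ttl;
    private final Supplier<Instant> clock;

    TtlConfigCache(Duration ttl, Supplier<Instant> clock) {
        this.ttl = ttl;
        this.clock = clock;
    }

    // Returns the cached result, or calls the loader (e.g. an ETCD lookup) if absent or expired
    Optional<String> get(String key, Function<String, Optional<String>> loader) {
        Instant now = clock.get();
        // compute() is atomic per key, so concurrent callers load a key at most once per expiry window
        Entry entry = entries.compute(key, (k, old) ->
                old != null && now.isBefore(old.expiresAt)
                        ? old
                        : new Entry(loader.apply(k), now.plus(ttl)));
        return entry.value;
    }

    void clear() {
        entries.clear();
    }
}
```

In production code the cache would be created with new TtlConfigCache(Duration.ofMinutes(30), Instant::now). Because the loader runs inside compute(), a slow lookup briefly blocks other callers of the same map bin; that trade-off keeps the sketch short.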

String, Integer, Boolean, Long, Float and Double configurations can be injected. ETCD always stores strings; conversion to the requested type happens after the value is read. In the background, the mechanism reads the values through ConfigEtcdHandler. See the configuration module.
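Because ETCD only stores strings, each typed value has to be parsed after reading. A hypothetical sketch of such a conversion for the supported types (not coffee's conversion code; trimming whitespace before parsing is a choice made for this example):

```java
// Hypothetical converter: ETCD values are strings, so typed configuration is parsed after reading.
final class EtcdValueConverter {

    private EtcdValueConverter() {
    }

    static <T> T convert(String raw, Class<T> type) {
        Object value;
        if (type == String.class) {
            value = raw;
        } else if (type == Integer.class) {
            value = Integer.valueOf(raw.trim());
        } else if (type == Long.class) {
            value = Long.valueOf(raw.trim());
        } else if (type == Float.class) {
            value = Float.valueOf(raw.trim());
        } else if (type == Double.class) {
            value = Double.valueOf(raw.trim());
        } else if (type == Boolean.class) {
            // Boolean.valueOf: "true" in any case -> true, anything else -> false
            value = Boolean.valueOf(raw.trim());
        } else {
            throw new IllegalArgumentException("Unsupported type: " + type.getName());
        }
        return type.cast(value);
    }
}
```

Note that a malformed number surfaces as a NumberFormatException at read time, not when the value is written to ETCD.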

4.4.3. ConfigEtcdHandler class

Provides reading and writing of ETCD configuration values as an injectable CDI bean. It uses ConfigEtcdService in the background.

@Inject

private ConfigEtcdHandler configEtcdHandler;

...

configEtcdHandler.putValue("public.email.sender", "noreply@sample.teszt.hu"); // write

@Inject

private ConfigEtcdHandler configEtcdHandler;

...

String senderEmail = configEtcdHandler.getValue("public.email.sender"); // read

Reference to another configuration

ConfigEtcdHandler, and therefore indirectly ConfigurationHelper and the @ConfigProperty annotation, also allow the value of one configuration to refer to another configuration. The referenced configuration key is enclosed in { and } characters.

@Inject

private ConfigEtcdHandler configEtcdHandler;

...

configEtcdHandler.putValue("protected.iop.url.main", "http://sample-sandbox.hu/kr_esb_gateway/services/IOPService?wsdl");

configEtcdHandler.putValue("protected.iop.url.alternate", "http://localhost:8178/SampleMockService/IOPService2?wsdl");

configEtcdHandler.putValue("public.iop.url", "{protected.iop.url.main}");

String iopUrl = configEtcdHandler.getValue("public.iop.url"); // returns "http://sample-sandbox.hu/kr_esb_gateway/services/IOPService?wsdl"

The value must consist of exactly one reference to another configuration; no other content is allowed. For example, the embedded reference in http://{other.etcd.conf}:8178/SampleMockService/IOPService2?wsdl will not be resolved.
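The whole-value reference rule can be illustrated with a small map-backed sketch. The ConfigReferenceResolver class is hypothetical and not coffee's implementation; resolving only a single level of reference is an assumption of the sketch, as the documentation does not say whether chains are followed.

```java
import java.util.Map;
import java.util.NoSuchElementException;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch of whole-value references such as "{protected.iop.url.main}".
// Resolves a single level only (assumption); not coffee's implementation.
final class ConfigReferenceResolver {

    // The entire value must be exactly one {key}
    private static final Pattern WHOLE_REFERENCE = Pattern.compile("\\{([^{}]+)\\}");

    private final Map<String, String> store; // stands in for ETCD

    ConfigReferenceResolver(Map<String, String> store) {
        this.store = store;
    }

    String getValue(String key) {
        String value = lookup(key);
        Matcher matcher = WHOLE_REFERENCE.matcher(value);
        // matches() must cover the whole string, so embedded references are returned unchanged
        return matcher.matches() ? lookup(matcher.group(1)) : value;
    }

    private String lookup(String key) {
        String value = store.get(key);
        if (value == null) {
            throw new NoSuchElementException("Configuration not found: " + key);
        }
        return value;
    }
}
```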

4.4.4. ConfigEtcdService class

Provides querying, writing, listing, searching and deleting of configuration values. It is the lowest-level class of those listed: all of the mechanisms above implement their functionality through it. You will probably only need to use it directly to delete or list configurations.

@Inject

private ConfigEtcdService configEtcdService;

...

configEtcdService.putValue("protected.iop.url.main", "http://sample-sandbox.hu/kr_esb_gateway/services/IOPService?wsdl"); // write

String iopUrl = configEtcdService.getValue("protected.iop.url.main"); // read

configEtcdService.delete("protected.iop.url.main"); // delete

@Inject

private ConfigEtcdService configEtcdService;

...

Map<String, String> allConfigMap = configEtcdService.getList(); //list all configuration

Map<String, String> publicConfigMap = configEtcdService.searchList("public."); // list configurations with the given key prefix (cannot be an empty String)

When a non-existent configuration is requested or deleted, the service throws a BONotFoundException. Since all the options listed above work through this service, this applies to all of them.
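For unit tests without a running ETCD server, a hypothetical in-memory stand-in that mirrors the operations described above can be useful. It is not part of coffee: the InMemoryConfigStore class is illustrative, and NoSuchElementException stands in for coffee's BONotFoundException.

```java
import java.util.Map;
import java.util.NoSuchElementException;
import java.util.TreeMap;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory stand-in mirroring the ConfigEtcdService operations described above.
// NoSuchElementException replaces coffee's BONotFoundException.
final class InMemoryConfigStore {

    private final Map<String, String> values = new ConcurrentHashMap<>();

    void putValue(String key, String value) {
        values.put(key, value);
    }

    String getValue(String key) {
        String value = values.get(key);
        if (value == null) {
            throw new NoSuchElementException("Configuration not found: " + key);
        }
        return value;
    }

    void delete(String key) {
        if (values.remove(key) == null) {
            throw new NoSuchElementException("Configuration not found: " + key);
        }
    }

    // Sorted snapshot of every configuration
    Map<String, String> getList() {
        return new TreeMap<>(values);
    }

    // Sorted snapshot of the configurations whose key starts with the prefix
    Map<String, String> searchList(String prefix) {
        if (prefix == null || prefix.isEmpty()) {
            throw new IllegalArgumentException("Prefix must not be empty");
        }
        Map<String, String> result = new TreeMap<>();
        values.forEach((key, value) -> {
            if (key.startsWith(prefix)) {
                result.put(key, value);
            }
        });
        return result;
    }
}
```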

4.4.5. Namespaces, configuration naming conventions

The configuration handler does not support separate namespaces; all information stored in ETCD is accessible.

Each configuration key starts with a visibility prefix. They are managed according to the following conventions:

| Prefix | Description |
|---|---|
|  | Configuration available only to the backend |
|  | Available to both backend and frontend; read-only for the frontend |
|  | Available to both backend and frontend; the frontend can change its value |

4.4.6. Configuration management using Command Line Tool

Download and unpack the ETCD package for your system: https://github.com/coreos/etcd/releases/

Set the ETCDCTL_API environment variable to 3:

#Linux

export ETCDCTL_API=3

#Windows

set ETCDCTL_API=3

From the command line, etcdctl can be used to read and write the values in the ETCD configuration (the examples use the Windows %ETCD_ENDPOINTS% variable syntax; on Linux use $ETCD_ENDPOINTS):

#Read the whole configuration

etcdctl --endpoints=%ETCD_ENDPOINTS% get "" --from-key

#Read the value of a given configuration

etcdctl --endpoints=%ETCD_ENDPOINTS% get private.sample

#Write the value of a given configuration

etcdctl --endpoints=%ETCD_ENDPOINTS% put private.sample value

4.4.7. Logging

Retrieved keys and their values are logged. If a key matches the regular expression [\w\s]*?secret[\w\s]*? or [\w\s]*?pass[\w\s]*?, its value is masked before being logged.

The default regex can be overridden by setting coffee.config.log.sensitive.key.pattern in one of the default microprofile-config sources (system property, environment variable, META-INF/microprofile-config.properties). Multiple patterns can be specified, separated by commas.
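The masking rule can be sketched as below. The two default patterns and the comma-separated override format come from the text above; everything else is an assumption for illustration: the SensitiveValueMasker class, case-insensitive matching, using find() so that dotted keys such as private.db.password match, and the "*****" mask string.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical sketch of masking sensitive configuration values before logging.
// Default patterns are from the documentation; CASE_INSENSITIVE, find() and the mask are assumptions.
final class SensitiveValueMasker {

    static final String DEFAULT_PATTERNS = "[\\w\\s]*?secret[\\w\\s]*?,[\\w\\s]*?pass[\\w\\s]*?";
    private static final String MASK = "*****";

    private final List<Pattern> patterns = new ArrayList<>();

    // e.g. the value of coffee.config.log.sensitive.key.pattern
    SensitiveValueMasker(String commaSeparatedPatterns) {
        for (String pattern : commaSeparatedPatterns.split(",")) {
            if (!pattern.isBlank()) {
                patterns.add(Pattern.compile(pattern.trim(), Pattern.CASE_INSENSITIVE));
            }
        }
    }

    // Builds the "key=value" text to log, masking the value of sensitive keys
    String forLog(String key, String value) {
        for (Pattern pattern : patterns) {
            if (pattern.matcher(key).find()) {
                return key + "=" + MASK;
            }
        }
        return key + "=" + value;
    }
}
```

Because the patterns are split on commas, a single pattern cannot itself contain a comma (for example a {2,3} quantifier).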

4.4.8. microprofile-health support

The EtcdHealth class can check whether the ETCD server is reachable.

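import jakarta.enterprise.context.ApplicationScoped;
import jakarta.enterprise.inject.Produces;
import jakarta.inject.Inject;

import org.eclipse.microprofile.health.HealthCheck;
import org.eclipse.microprofile.health.HealthCheckResponse;
import org.eclipse.microprofile.health.Startup;

// EtcdHealth and BaseException come from coffee (imports omitted)

@ApplicationScoped
public class EtcdHealthCheck {

    @Inject
    private EtcdHealth etcdHealth;

    public HealthCheckResponse check() {
        try {
            return etcdHealth.checkConnection("etcd");
        } catch (BaseException e) {
            // the connection check failed, so report ETCD as DOWN
            return HealthCheckResponse.builder().name("etcd").down().build();
        }
    }

    @Produces
    @Startup
    public HealthCheck produceEtcdCheck() {
        return this::check;
    }
}

4.5. coffee-module-localization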

4.5.1. Localization

Localization is useful in a backend system from several perspectives, for example for error codes, enum translations or generating documents in different languages. For this purpose, the module uses DeltaSpike Messages and i18n, enhanced with newer CDI features.

It has three components:

-

language detection (LocaleResolver)

-

language files

-

language localization manager (LocalizedMessage)

Language detection (LocaleResolver)

By default, DeltaSpike includes a built-in language resolver that returns the locale of the running JVM. This is not appropriate for a server system, so an @Alternative has to be created with CDI, like this:

import java.util.Locale;

import jakarta.annotation.Priority;

import jakarta.enterprise.context.Dependent;

import jakarta.enterprise.inject.Alternative;

import jakarta.inject.Inject;

import jakarta.interceptor.Interceptor;

import org.apache.commons.lang3.StringUtils;

import org.apache.deltaspike.core.impl.message.DefaultLocaleResolver;

import hu.icellmobilsoft.coffee.cdi.logger.AppLogger;

import hu.icellmobilsoft.coffee.cdi.logger.ThisLogger;

import hu.icellmobilsoft.project.common.rest.header.ProjectHeader;

@Dependent

@Alternative